The Importance of Asking the Right Question

By Rob Cappiello · May 24, 2023

Prompt engineering is a new term for most of us, but the role and its functions have been around for quite some time. The term comes from the field of Natural Language Processing (NLP), which involves teaching machines to understand and generate human language. For me, it’s about asking the right question to get a desired response. When we talk about a "prompt" in NLP, we're referring to a set of instructions or cues given to a machine learning model to guide it toward a specific kind of output. The prompt is like a question or a fill-in-the-blank statement that the model completes based on its training data.

Prompt engineering is the process of crafting these prompts so that they produce the desired output. This involves understanding the capabilities and limitations of the model being used, as well as the characteristics of the data it was trained on. Effective prompt engineering can make a big difference in the performance of an NLP model, because it helps ensure that the model generates accurate and relevant outputs. It's an important skill for anyone working in NLP, from researchers to developers to data scientists. To illustrate, I’d like to share a simple thought from our esteemed colleague Josh Stella, Trellix VP of Cloud Architecture: “LLMs have the answer, it’s the question that you need to understand.”

Here is how to tell a good prompt from a bad one. Let's say we have an NLP model that has been trained to generate movie recommendations based on user input, using a dataset of movie titles, synopses, and user ratings. A good prompt for this model might be: "Please recommend cult classic action movies suitable for kids that grossed less than 50 million USD and featured scenes with helicopters." This prompt is specific, relevant to the task at hand, and gives the model clear context to work with. It prompts the model to generate recommendations based on the user's preference for action movies, which is a relevant feature for the model to consider.

A bad prompt for the same model might be: "Tell me something about movies." This prompt is too vague and doesn't provide enough information or context for the model to generate a meaningful response. It's not clear what the model should focus on or what kind of output is expected.

In general, a good prompt provides clear and relevant information for the model to work with, while a bad prompt is too vague or unrelated to the task at hand. Next, to put our prompting example to good use, we will use ChatGPT as our NLP model.
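To make the contrast concrete, here is a minimal Python sketch that assembles the good prompt from explicit criteria, so every requirement the model should honor is stated rather than implied. The `MovieCriteria` class and `build_recommendation_prompt` function are hypothetical names for illustration; the block only builds prompt text and does not call ChatGPT or any other model.

```python
from dataclasses import dataclass


@dataclass
class MovieCriteria:
    """Hypothetical container for the constraints a user cares about."""
    style: str                   # e.g. "cult classic"
    genre: str                   # e.g. "action"
    audience: str                # e.g. "kids"
    max_gross_millions_usd: int  # box-office ceiling, in millions of USD
    required_scene: str          # e.g. "helicopters"


def build_recommendation_prompt(c: MovieCriteria) -> str:
    """Turn explicit criteria into one specific prompt (hypothetical helper)."""
    return (
        f"Please recommend {c.style} {c.genre} movies suitable for {c.audience} "
        f"that grossed less than {c.max_gross_millions_usd} million USD "
        f"and featured scenes with {c.required_scene}."
    )


# The vague prompt gives the model no criteria at all.
BAD_PROMPT = "Tell me something about movies."

good_prompt = build_recommendation_prompt(
    MovieCriteria("cult classic", "action", "kids", 50, "helicopters")
)
print(good_prompt)
```

Keeping the criteria in a structure rather than free text makes it obvious when a prompt is missing one, which is exactly the gap in the vague version.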

Use Case #1: Having the AI Categorize Your 1,000 Integrations

As Product Managers, we are inundated with a wide variety of topics that involve frequent context switching and, eventually, the grunt work of producing output for our internal teams and external customers. By using Large Language Models (LLMs) more often in our roles, we start to understand how prompt engineering lets us not only work faster but also generate content and deliver innovations to our customers more quickly.

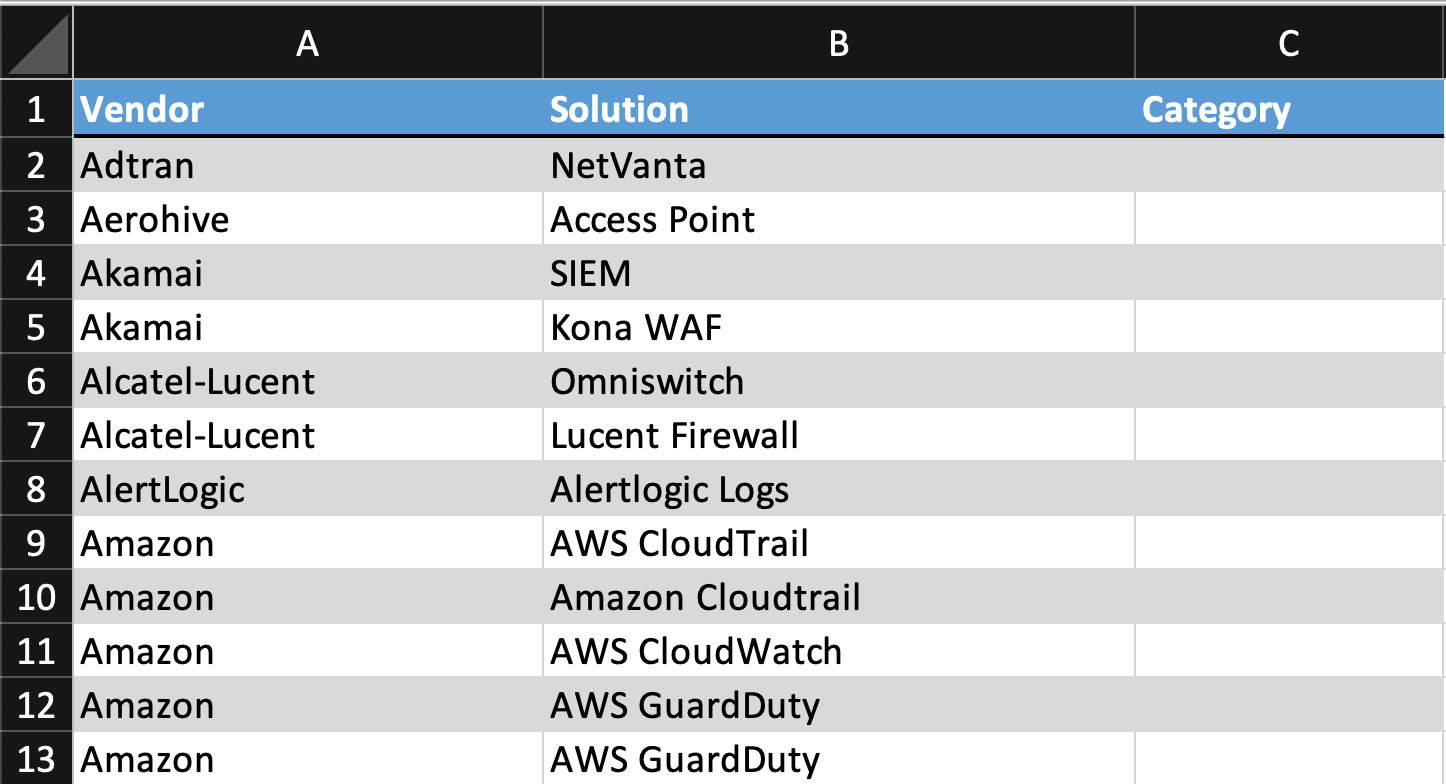

Recently I was tasked with assigning an industry category to each of the 1,000 integrations Trellix has amassed over more than five years. The spreadsheet of these integrations had columns for the vendor name and the solution name, but the category column was blank. A searchable category would make it easier for our Trellix XDR customers to find solutions by the type of deployment they currently have.
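The starting point can be pictured as a table in which every row has a vendor and a solution but no category. The sketch below assumes a CSV export with hypothetical `Vendor`, `Solution`, and `Category` headers and uses placeholder rows rather than real vendor data; it loads the table with Python's standard `csv` module and collects the rows that still need a category.

```python
import csv
import io

# Hypothetical CSV export of the integration list (placeholder rows only).
EXPORT = """Vendor,Solution,Category
Vendor A,Solution One,
Vendor B,Solution Two,
Vendor C,Solution Three,Email Security
"""


def load_rows(text: str) -> list[dict[str, str]]:
    """Parse the CSV export into one dict per integration."""
    return list(csv.DictReader(io.StringIO(text)))


def missing_category(rows: list[dict[str, str]]) -> list[dict[str, str]]:
    """Return rows whose Category cell is empty, missing, or whitespace."""
    return [r for r in rows if not (r.get("Category") or "").strip()]


rows = load_rows(EXPORT)
todo = missing_category(rows)
print(f"{len(todo)} of {len(rows)} integrations need a category")
```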


Categorizing them is a simple task, but looking up each solution one by one on its product page is very time-consuming and tedious. In fact, I tried doing this manually for about an hour and got through about 15 solutions before I realized that this was a perfect use case for an LLM. Rather than spending what could have been a full work week mapping solutions to categories, I prompted ChatGPT to produce the result Trellix was looking for.

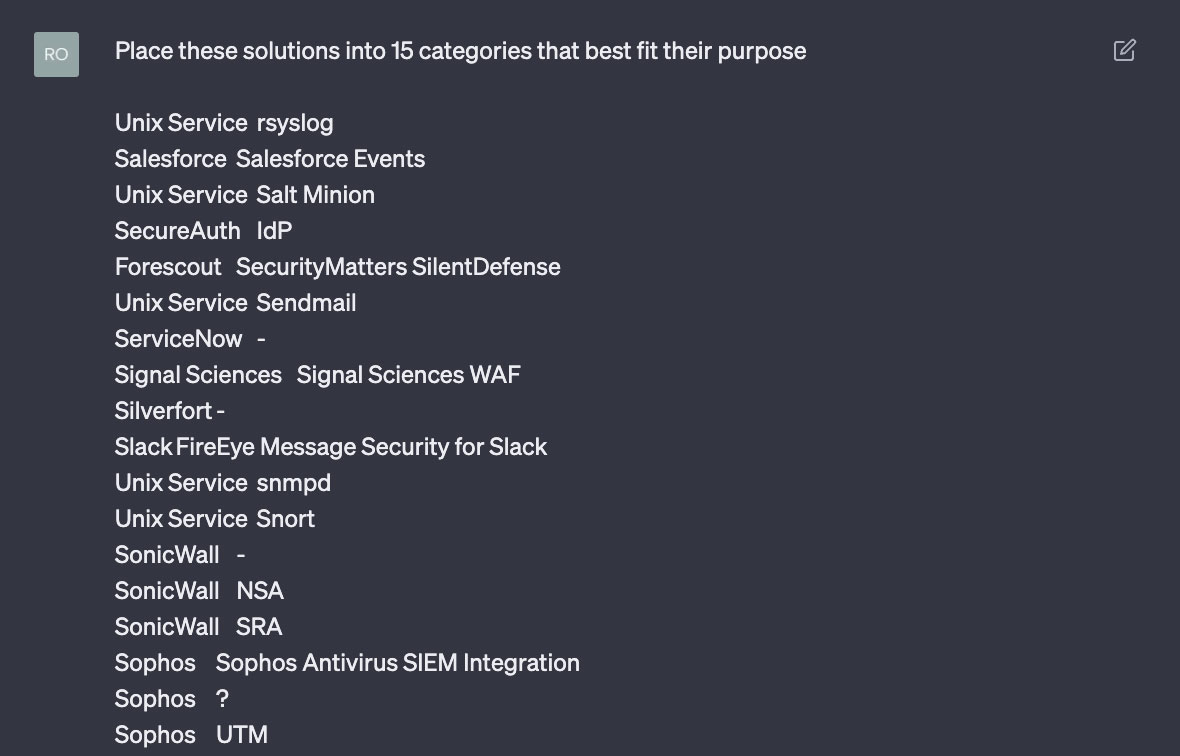

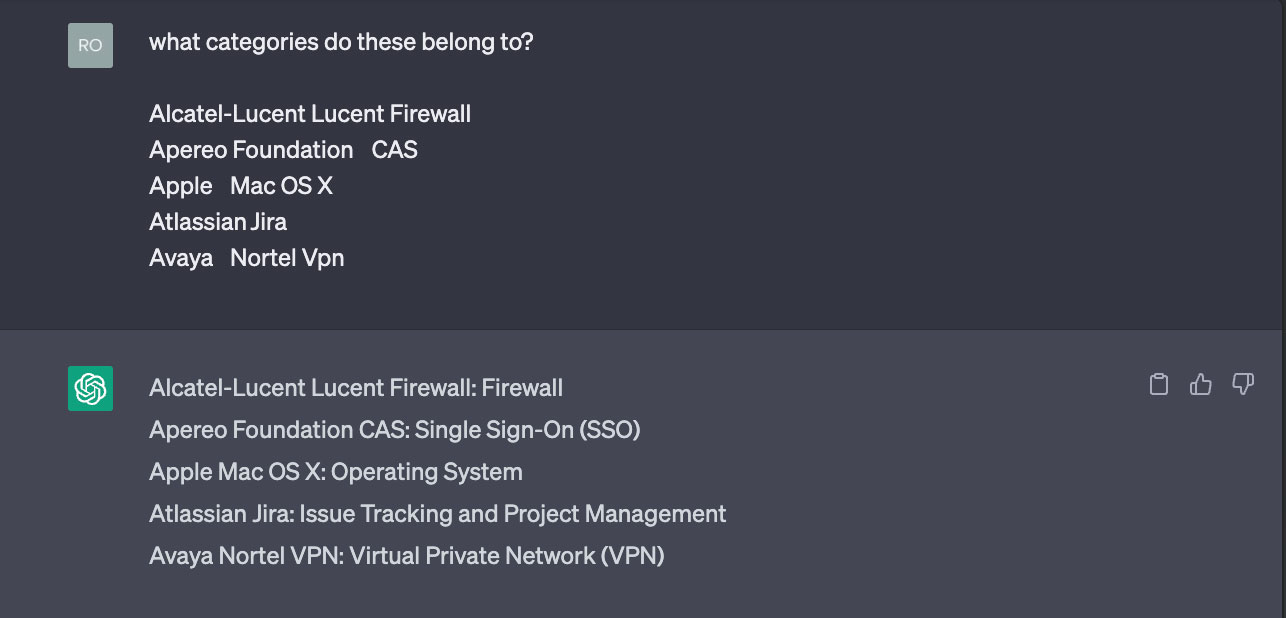

In my first attempt at prompt engineering, I asked ChatGPT to define the categories and keep their number limited, so our customers could search for broad terms and still find most of what they needed.
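A first prompt of this kind can be sketched as a function that lists the solutions and caps the number of categories. This is not the exact prompt used here; the wording, the cap, and the helper name `build_category_prompt` are assumptions for illustration. Note that it asks for solutions to be placed under categories, which is the grouped shape of output described next.

```python
def build_category_prompt(
    solutions: list[tuple[str, str]], max_categories: int = 10
) -> str:
    """Ask for a few broad categories with solutions grouped under them (hypothetical wording)."""
    listing = "\n".join(f"- {vendor}: {solution}" for vendor, solution in solutions)
    return (
        f"Define no more than {max_categories} broad industry categories that "
        "customers could search for, then place each of the following "
        "integrations under the category that fits it best:\n" + listing
    )


prompt = build_category_prompt(
    [("Vendor A", "Solution One"), ("Vendor B", "Solution Two")], max_categories=8
)
print(prompt)
```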

The output was not what I wanted. It listed the categories and then grouped the best-matching solutions under each one. Had I stopped here, I would have accomplished very little, because I still had to find each solution in the spreadsheet and type its category in manually.

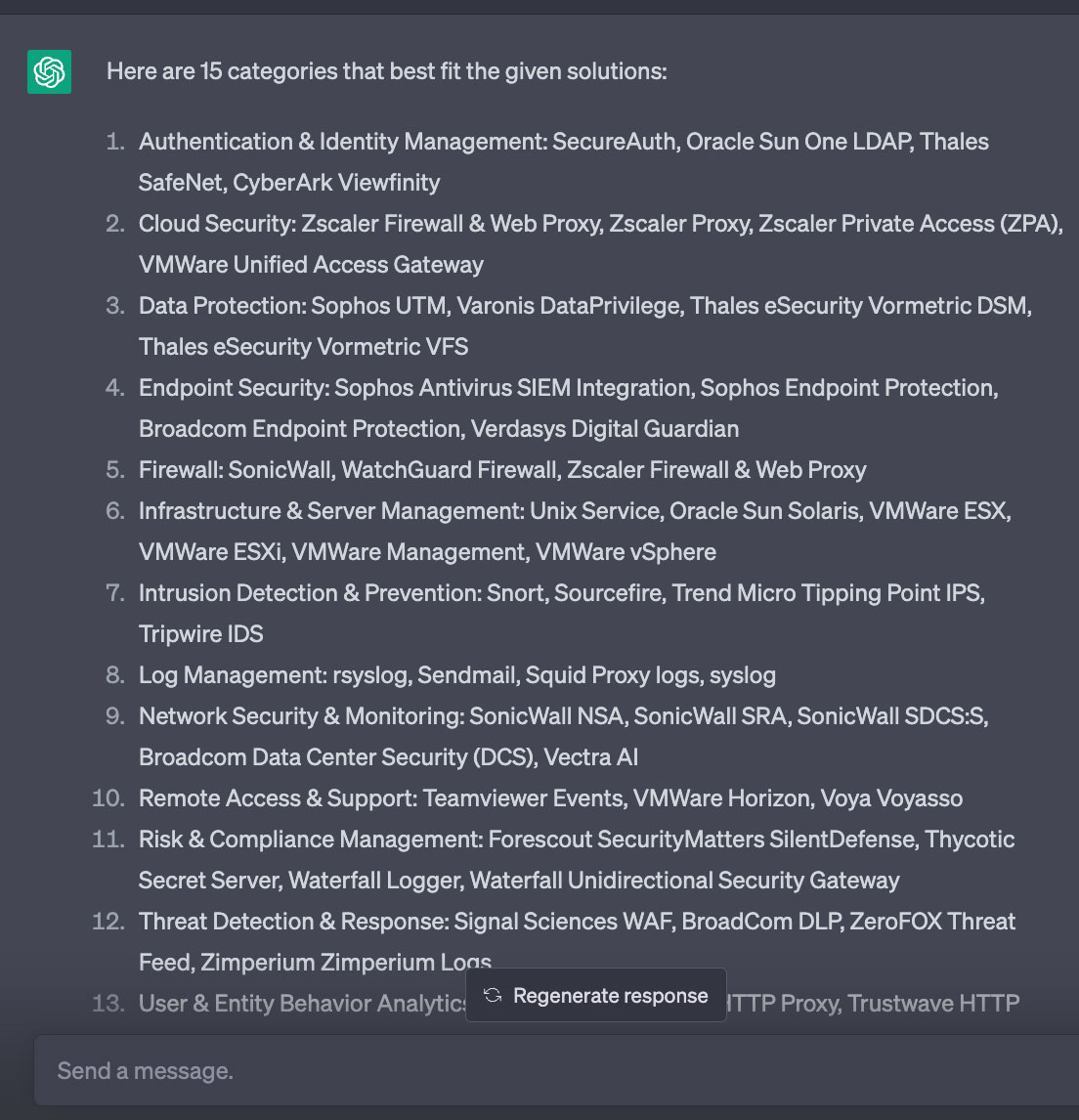

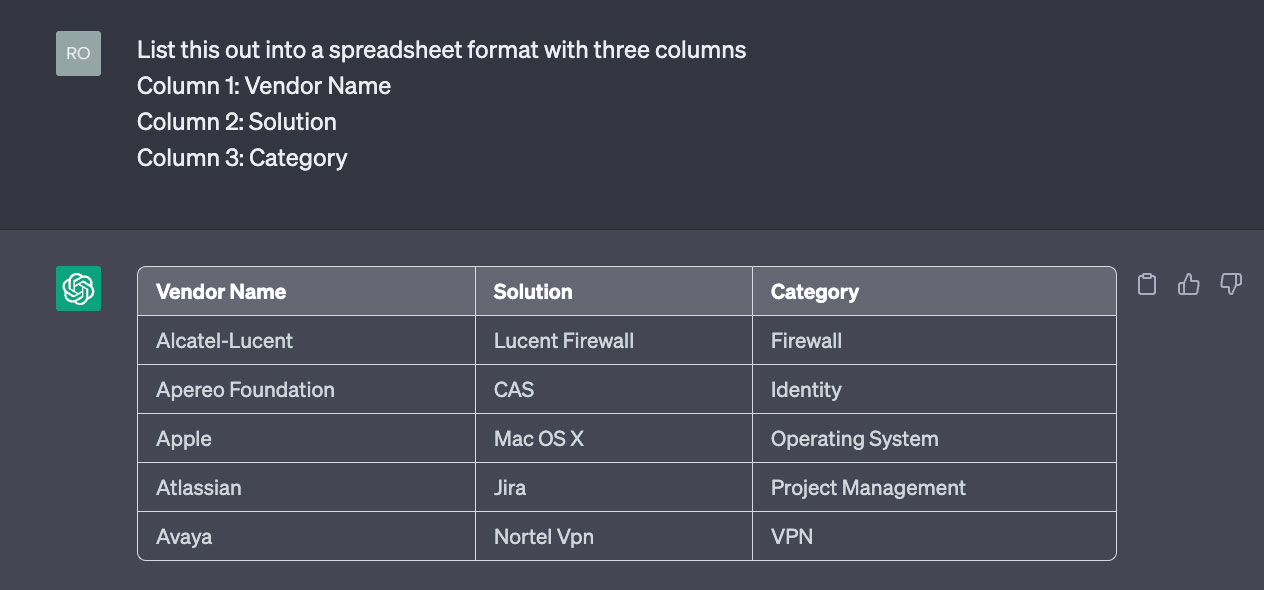

So I prompted ChatGPT to format the results so they would line up with the rows of the same spreadsheet.
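One way to ask for row-aligned output is to spell out a line template in the prompt and then parse each returned line back into a row. The sketch below assumes a pipe-delimited `Vendor | Solution | Category` format; the format, the helper names, and the sample reply are hypothetical, and the parser simply skips any line that does not fit the template, such as a conversational preamble.

```python
def build_row_prompt(pairs: list[tuple[str, str]]) -> str:
    """Build a prompt asking for one pipe-delimited line per input row."""
    listing = "\n".join(f"{vendor} | {solution}" for vendor, solution in pairs)
    return (
        "Assign an industry category to each integration below. "
        "Reply with one line per integration, in the same order, using exactly "
        "this format: Vendor | Solution | Category\n\n" + listing
    )


def parse_pipe_rows(reply: str) -> list[tuple[str, str, str]]:
    """Keep only lines shaped like 'Vendor | Solution | Category'."""
    rows = []
    for line in reply.splitlines():
        parts = [part.strip() for part in line.split("|")]
        if len(parts) == 3 and all(parts):
            rows.append((parts[0], parts[1], parts[2]))
    return rows


prompt = build_row_prompt([("Vendor A", "Solution One"), ("Vendor B", "Solution Two")])

# Hypothetical reply, including a chatty preamble that the parser skips.
sample_reply = """Here are your results:
Vendor A | Solution One | Endpoint Security
Vendor B | Solution Two | Cloud Security"""

print(parse_pipe_rows(sample_reply))
```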

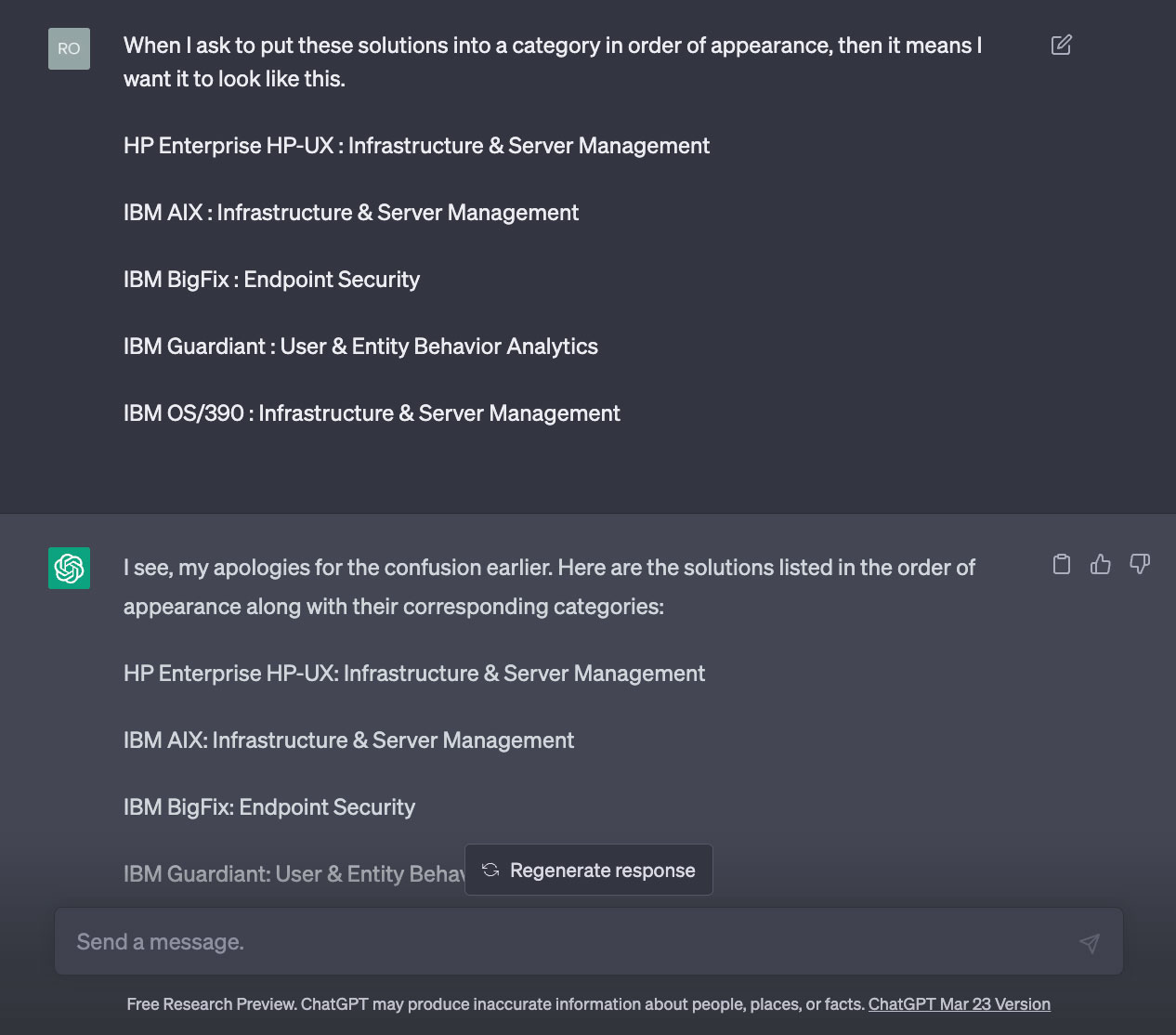

ChatGPT now followed the format I was looking for, so I prompted it again with a new set of vendors and solutions.
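Prompting with one new set of vendors and solutions at a time amounts to splitting the list into batches, each of which becomes one prompt. The sketch below uses a hypothetical `batches` helper, placeholder rows, and a batch size of 50 chosen arbitrarily for illustration; the batch size actually used is not stated.

```python
from typing import Iterator, Sequence, TypeVar

T = TypeVar("T")


def batches(items: Sequence[T], size: int) -> Iterator[Sequence[T]]:
    """Yield consecutive slices of at most `size` items, preserving order."""
    if size <= 0:
        raise ValueError("size must be positive")
    for start in range(0, len(items), size):
        yield items[start:start + size]


# Placeholder (vendor, solution) pairs standing in for the real list.
pairs = [(f"Vendor {i}", f"Solution {i}") for i in range(1, 1001)]

chunks = list(batches(pairs, 50))
print(len(chunks), len(chunks[-1]))  # 20 50
```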

Unfortunately, the results still had to be typed into our spreadsheet manually. Again, the right question leads to the response you want, so I prompted ChatGPT to produce the output in a spreadsheet format using our column titles.

…and there you go! Do all the categories make sense? Not all of them, so you still have to double-check. However, it’s much quicker to verify and reassign a category that already exists than to fill in a blank cell.
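Output in spreadsheet format under the existing column titles can be parsed as CSV and merged back into the sheet by vendor and solution. Because not every category makes sense, the sketch below also flags any category outside an agreed list so a person can double-check it. The allowed category names, the `Vendor,Solution,Category` header, and the sample reply are hypothetical; a flagged category is still written into the row so the reviewer sees it in context.

```python
import csv
import io

# Hypothetical agreed category list used to catch unexpected labels.
ALLOWED = {"Endpoint Security", "Cloud Security", "Identity", "Email Security"}


def _cell(record: dict, key: str) -> str:
    """Read a cell safely; csv.DictReader fills missing fields with None."""
    return (record.get(key) or "").strip()


def merge_reply(rows: list[dict], reply_csv: str) -> list[tuple[str, str, str]]:
    """Fill blank Category cells from a CSV reply and return entries to review.

    Rows are matched on (Vendor, Solution). A suggested category outside
    ALLOWED is still written into the row but is also listed for review.
    """
    suggested = {
        (_cell(r, "Vendor"), _cell(r, "Solution")): _cell(r, "Category")
        for r in csv.DictReader(io.StringIO(reply_csv))
    }
    to_review = []
    for row in rows:
        key = (_cell(row, "Vendor"), _cell(row, "Solution"))
        category = suggested.get(key, "")
        if not _cell(row, "Category") and category:
            row["Category"] = category
            if category not in ALLOWED:
                to_review.append((key[0], key[1], category))
    return to_review


sheet = [
    {"Vendor": "Vendor A", "Solution": "Solution One", "Category": ""},
    {"Vendor": "Vendor B", "Solution": "Solution Two", "Category": ""},
]
reply = """Vendor,Solution,Category
Vendor A,Solution One,Endpoint Security
Vendor B,Solution Two,Miscellaneous Tools
"""

review = merge_reply(sheet, reply)
print(review)  # [('Vendor B', 'Solution Two', 'Miscellaneous Tools')]
```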

We now had a result that was ready to paste into a spreadsheet and share with our online web team, so our XDR customers could filter the full list of 1,000 integrations by solution and category. A 30-minute prompting session saved Trellix 60+ hours of tedious manual labor in producing a customer-facing integration list.
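The savings estimate follows from the manual pace reported earlier, about 15 solutions in an hour, assuming that pace would have held across the whole list:

$$
\frac{1{,}000\ \text{integrations}}{15\ \text{integrations/hour}} \approx 66.7\ \text{hours}, \qquad 66.7\ \text{hours} - 0.5\ \text{hours} \approx 66\ \text{hours saved}
$$

This is consistent with the 60+ hours figure, before counting the time spent reviewing the generated categories.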

In the next post in this series, I will show how our Marketing team is applying Use Case #2 to generate release announcements.
