Transforming Threat Actor Research into a Strong Defense Strategy

By James Murphy, Ale Houspanossian, Leandro Velasco LV and Ilya Kolmanovich · November 14, 2024

What does it take to transform threat actor research into detection engineering? If we look at threat intelligence at its core, then we focus on studying and deeply understanding the behaviors and actions of threat actors. How, then, do we go from studying ever-evolving malicious behaviors to enhanced detection efficacy, and finally to a practical implementation of defenses for Trellix customers?

This blog aims to switch the focus slightly, looking inward at how Trellix itself leverages the incredible work done by the Advanced Research Center (ARC) and our Product Engineering teams to improve the overall efficacy of our products. The end result is stronger defenses for our customers, and here we'll look at how threat intelligence is consumed by Trellix's own detection engineering teams.

We’ll also look at how Solutions Engineers take these products and work directly with customers to design defense strategies based on the unique requirements and characteristics of each.

Phase 1 - Understanding the ransomware landscape: Trellix's Advanced Research Center uncovers actor TTPs, tools, commands and more

ARC is Trellix’s specialized research unit that focuses on identifying and analyzing emerging threats. This research and analysis is critical in enhancing Trellix’s detection efficacy across ransomware, malware, threat actor tactics, vulnerabilities, and more.

Our ARC team performs a huge amount of research and analysis, so it’s a challenge to distill all of the value down into a single blog post! We’ll focus on two key areas here: intelligence gathering and analysis, and innovation and continuous improvement.

Intelligence gathering and analysis

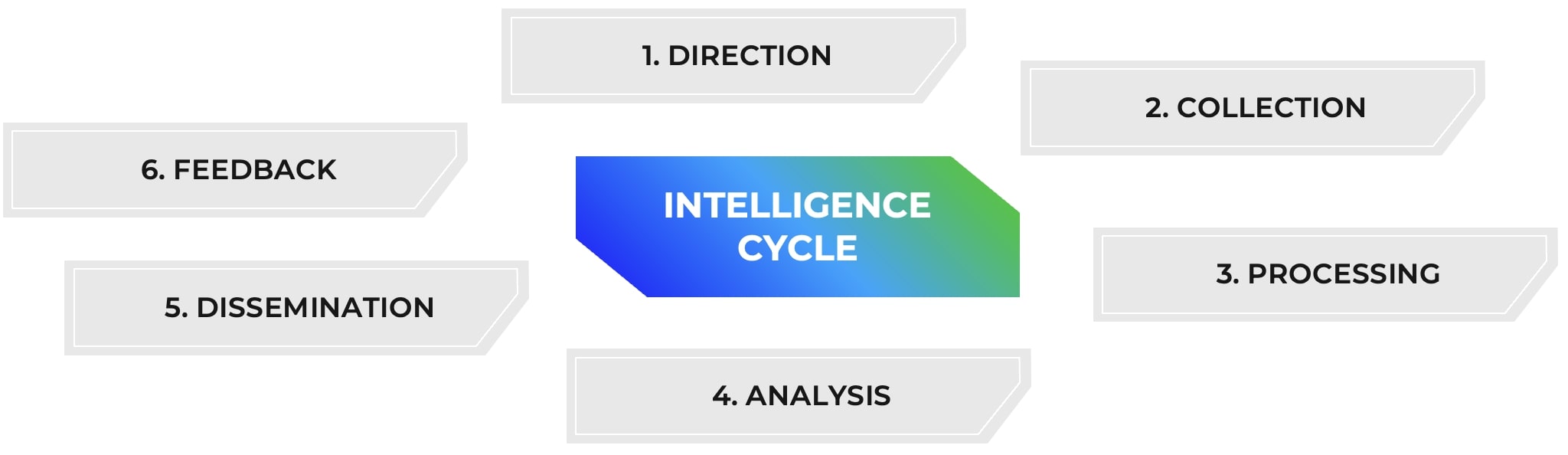

The intelligence cycle

It would be remiss not to start with the intelligence cycle. The intelligence cycle exists to ensure a structured, repeatable process that takes raw data and turns it into actionable insights. It also ensures consistency, accuracy, and relevance.

The threat intelligence cycle typically has six parts (or five, with some models omitting the feedback step seen above). Each step ensures that the intelligence generated by Trellix ARC follows the same high standard of quality across everything we do. It would be too lengthy to describe it in full in this blog, but you can see here for a more detailed breakdown of the steps.

This process covers the monitoring and analyzing of vulnerabilities, threat actors, and malware campaigns, as well as extracting, vetting, normalizing, and labeling data before documenting the findings and integrating them with Trellix’s internal telemetry sources.

Malware analysis

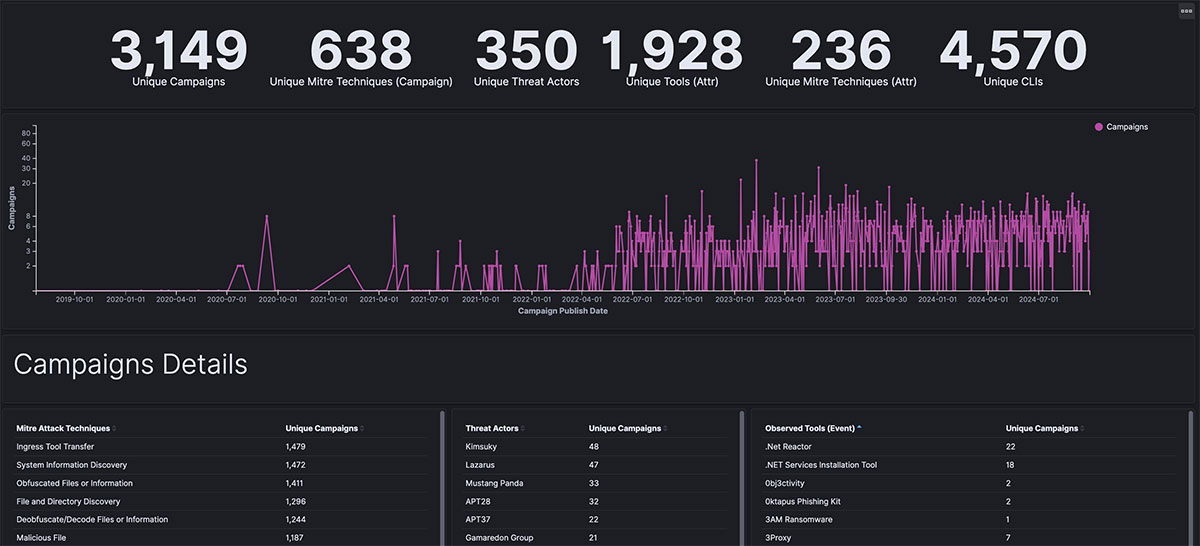

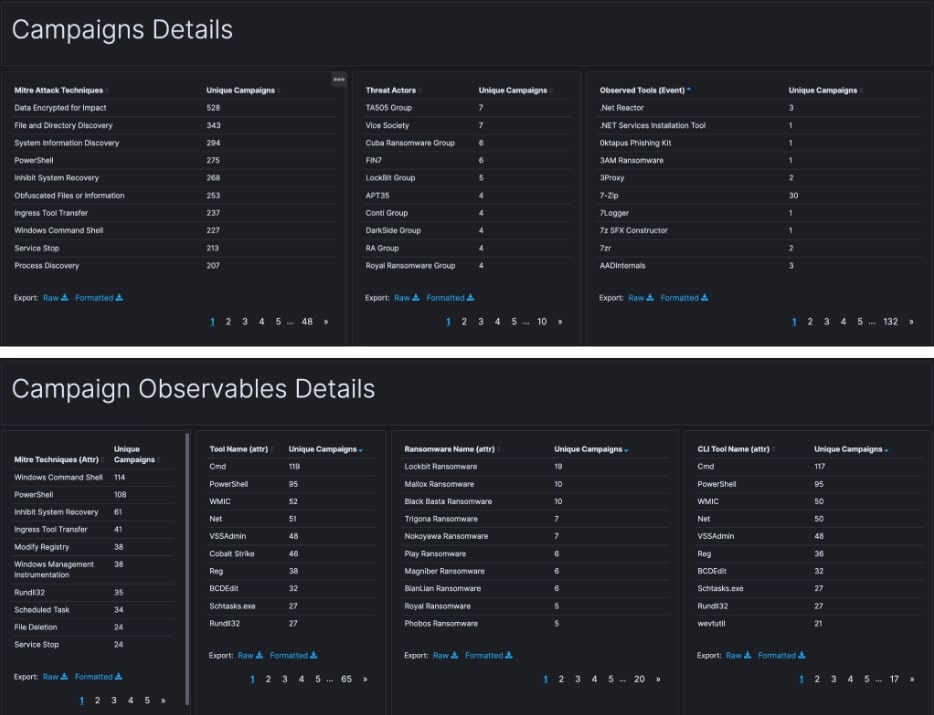

Trellix ARC collects information on a huge range of threats, more than we can cover here, and employs a range of techniques and technologies to collect and curate intelligence. The culmination of this work can be seen not only in the improved detection efficacy of Trellix products, but also in our threat intelligence tools like Trellix’s Advanced Threat Landscape Analysis System (ATLAS), where we can see the Campaign TTPs dashboard:

At the core of our operations is Trellix’s malware analysis program. YARA rules are implemented and maintained to monitor malware samples, which is followed by in-depth behavioral analysis as new malware families and strains evolve and improve. Malware analysis is not a simple process, requiring not only specialized skill sets, but specific technologies as well. Here, ARC uses tools like Ghidra for Golang malware analysis, with plugins that are fine-tuned and maintained by the team.

Sometimes existing tooling doesn’t fit all use cases, such as having simple access on disk to in-memory artifacts for .NET malware. Trellix ARC developed DotDumper in-house to solve this: it allows analysts to dump these artifacts, speeding up a tedious process that consumes a disproportionate amount of analyst time. This lets our analysts focus on uncovering new threats and interesting campaigns, rather than mundane, generic and widespread malware. We’ve also made it freely available - check it out here on our GitHub.

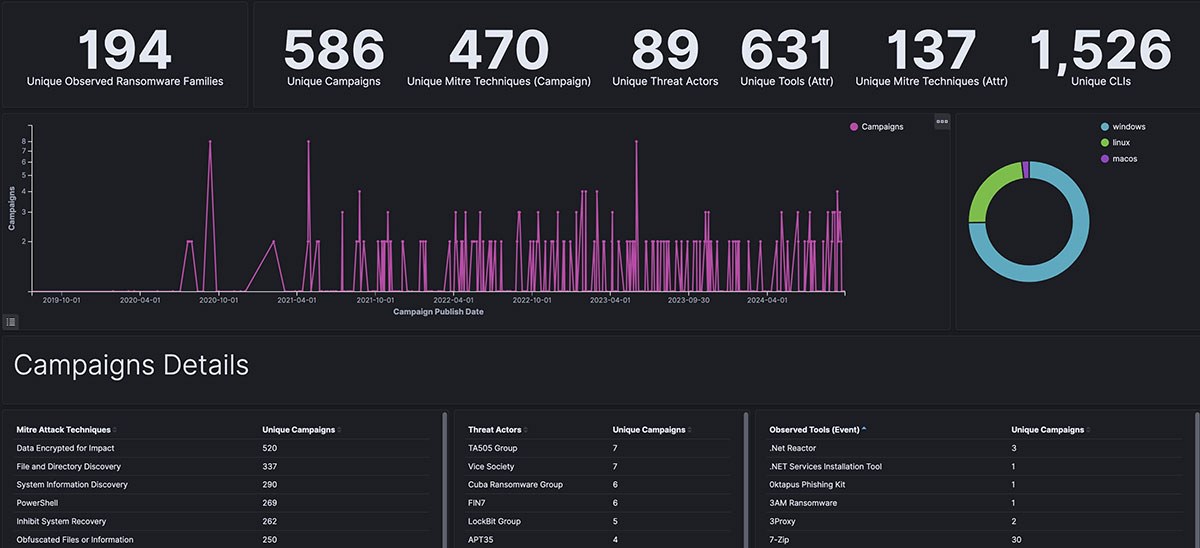

Ransomware, threat tracking and adversary intelligence

Trellix ARC collects extensive information on ransomware groups, threats, and adversaries. Bringing in this data involves monitoring and analyzing information from many sources, so automation and intelligent workflows are required to not only take the load off the analysts, but enable analysts to collect more thorough information quickly.

To assist in the collection of information on ransomware groups, threat actors, and specific threats (such as Gootloader and Cobalt Strike), analysts leverage automated workflows. These workflows help to automate monitoring of activity related to groups and threats, and allow us to identify emerging threats and their evolving TTPs.

Surveillance of underground communities is pivotal to ensure we catch a wide range of criminal activity. Ransomware leak sites are a valuable resource to understand ransomware operators, their victims, and to monitor how their trends and behaviors change over time. Dark web forums and other communities (such as Telegram) are an extremely popular place for cyber criminal trade, allowing us to capture what is being advertised, which operators are prevalent, what organizations they are targeting, and so much more.

Fascinatingly, dark web and threat actor communities often draw parallels with regular life, with geopolitics, business disagreements, ideological differences, and leadership struggles all playing their part. This context is useful in monitoring broader cyber criminal trends alongside efforts made by law enforcement organizations.

Threat hunting

Finally, threat hunting is our answer to the ever-changing threat landscape. We leverage this valuable source of information to review malicious activities by reconstructing attack chains that threat actors designed to achieve their nefarious goals. This approach assists in identifying campaigns as they unfold. Because Trellix has hundreds of millions of sensors deployed globally, we’re able to track threat actor and malware activity across this telemetry, and correlate with other sources (like MISP), providing richer and contextualized detection feeds.

Extracting MITRE ATT&CK tactics, techniques and procedures

Two of the many outcomes of Trellix ARC’s efforts, and ways to visualize them, are the Ransomware TTPs dashboard and the Campaign TTPs dashboard (mentioned earlier) in Trellix ATLAS:

Beyond high-level TTPs, the efforts of ARC also culminate in deep-level technical intelligence, such as specific tools, code, and command line strings that actors are developing. Tracking and analysis of this content helps us understand trends around threat groups and how they evolve - for example, sometimes we see sections of code used by emerging groups that we’ve formerly seen used by now-defunct groups, helping us to understand the origins of these groups.

Innovation and continuous improvement

We’ve explored the breadth and depth of Trellix ARC’s intelligence gathering processes, but collection is just one step of the process. ARC’s other key goal is to ensure that the data we collect is thoroughly cleaned up and correlated with other information to provide the fullest context possible.

Correlation, context, and technology

We achieve this broadly by performing advanced correlation, contextualization and optimization of the information and telemetry gathered. First, this helps us to reduce the noise around large telemetry data sets, and improves the integration of the telemetry into our threat intelligence knowledgebase. From here, correlation and contextualization of the information is enabled by Trellix ATLAS, which correlates information from our MISP events with selected sources of telemetry.
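As a rough illustration of the correlation idea (not how ATLAS itself is implemented), the sketch below joins intelligence indicators to telemetry sightings and drops everything that matches nothing. The record shapes, field names, and values are all assumptions made for the example; real MISP events and product telemetry carry far more detail.

```python
from collections import defaultdict

# Hypothetical, simplified records. Field names and values are illustrative only.
misp_events = [
    {"event": "RansomHub campaign", "indicator": "tdsskiller.exe"},
    {"event": "Gootloader campaign", "indicator": "evil.example.com"},
]
telemetry = [
    {"sensor": "host-01", "observable": "TDSSKiller.exe"},
    {"sensor": "host-02", "observable": "notepad.exe"},
    {"sensor": "host-03", "observable": "tdsskiller.exe"},
]


def correlate(events, sightings):
    """Group telemetry sightings under the intelligence event they match."""
    # Normalize case so "TDSSKiller.exe" and "tdsskiller.exe" are the same key.
    by_indicator = {e["indicator"].lower(): e["event"] for e in events}
    matches = defaultdict(list)
    for sighting in sightings:
        event = by_indicator.get(sighting["observable"].lower())
        if event is not None:  # telemetry with no intelligence match is treated as noise
            matches[event].append(sighting["sensor"])
    return dict(matches)


print(correlate(misp_events, telemetry))
# {'RansomHub campaign': ['host-01', 'host-03']}
```

Even in this toy form, the two benefits described above are visible: unmatched telemetry falls away, and each remaining sighting arrives labeled with the campaign context it belongs to.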

Like a lot of great tools, ATLAS was born out of necessity. Developed in-house by the ARC team, it provides richer detection information and enhanced tracking abilities. This is hugely useful for both our threat intelligence and detection engineering teams, allowing us to monitor threat actors, their activities, and their evolving TTPs quickly, and ensuring better detection coverage across our products.

In addition to solutions like DotDumper and ATLAS, Trellix ARC continues to innovate. We leverage automation and generative AI for data enrichment, analysis and presentation of intelligence. We also conduct advanced reverse engineering research, particularly for .NET- and Golang-based malware.

Continuous process improvement and comprehensive documentation

Rounding off the intelligence section of this blog is how ARC ensures the core things are done correctly and consistently: improving our processes wherever possible, and in-depth documentation. Various process improvements include data labeling, correlation, intelligence presentation, quality and accessibility enhancements, and more.

The intelligence knowledge base contains all of our findings, which are meticulously documented, and serves as a comprehensive repository of threat actor behaviors, tactics, techniques and procedures. This, along with our blogs, threat reports, MISP events, and information generated by ATLAS, all form crucial elements of our contribution to the cyber security community.

Phase 2 - Enhanced protection: EDR team takes the key information, transforming it into detection rules

Modern cyber threats have become more sophisticated and have multiple stages. Adversaries model these with the attack chain in mind, focusing on evading defenses and blending in with common activity. To achieve this, attackers leverage legitimate software, including admin tools, hacktools, command line interfaces, and script interpreters. Because of the broader evolution in adversary TTPs, we can no longer depend on traditional detection methods that rely solely on IOCs like file hashes or IP addresses.

Detection engineering for Trellix’s EDR team is centered around two key steps. The first is to understand adversarial TTPs and identify suspicious or common behavioral patterns. This is enabled by Trellix ARC’s comprehensive threat intelligence capability that we’ve already explored.

The second step is for the EDR team to create, test, deploy, and maintain a base of detection rules and models. Just as threat actors innovate, we must do the same, and as rapidly as possible.

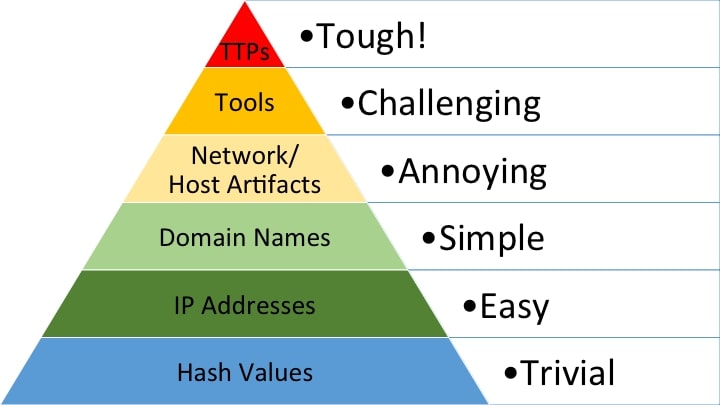

Pyramid of Pain

Above all, the detection engineering process focuses on impacting the adversary at the very top of the Pyramid of Pain. The Pyramid of Pain is a conceptual model that illustrates the increasing difficulty and impact caused to adversaries when defenders detect and disrupt different aspects of their operations.

At the bottom there’s “Trivial” and “Easy”, for hash values and IP addresses. What this means is that while we can block a file hash or IP address, it’s incredibly easy for an attacker to modify these - such as modifying one single bit in the malware, or changing servers to one with a different IP address. They can simply continue their attack.
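To make the "one single bit" point concrete, here is a minimal sketch using Python's standard `hashlib`. The sample bytes and the `flip_one_bit` helper are stand-ins invented for the example, not real malware or Trellix tooling.

```python
import hashlib


def flip_one_bit(data: bytes, bit_index: int = 0) -> bytes:
    """Return a copy of data with exactly one bit inverted."""
    mutated = bytearray(data)
    mutated[bit_index // 8] ^= 1 << (bit_index % 8)
    return bytes(mutated)


original = b"stand-in bytes for a malware sample"
modified = flip_one_bit(original)

# A hash-based blocklist built from the original sample.
blocklist = {hashlib.sha256(original).hexdigest()}

print(hashlib.sha256(original).hexdigest() in blocklist)  # True
print(hashlib.sha256(modified).hexdigest() in blocklist)  # False
```

The modified sample differs by a single bit, yet its hash no longer appears in the blocklist, which is why hash values sit at the "Trivial" base of the pyramid.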

However if we look at the top, we see “Tools” and “TTPs”. We commonly see multiple threat actors rely on the same tools, and that’s usually because that tool is effective at helping the actor achieve their goals.

A great example is the dual-use tool AutoIt, which helps attackers simplify their development processes (which we wrote about here). If we manage to identify and block actions related to that tool, that method is no longer effective for the attacker, and it is challenging for them to adjust their process.

TTPs take it even further - if you can neutralize the overarching methods of the attacker, then it’s tough for them to change! An example of this would be an EDR tool detecting and preventing unusual activity related to LSASS.exe, or stopping tools like Mimikatz. This would help disrupt a credential dumping activity where attackers are attempting to extract passwords from systems (MITRE ATT&CK - Credential Access TA0006: OS Credential Dumping T1003).
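A behavior-focused rule of this kind could be sketched as follows. This is a simplified illustration, not Trellix EDR logic: the event fields (`action`, `source_image`, `target_image`, `reads_memory`) and the allowlist are hypothetical, and a real rule would work from much richer endpoint telemetry.

```python
# Hypothetical allowlist of processes expected to read LSASS memory.
# A real deployment would derive and maintain this from its own telemetry.
EXPECTED_LSASS_READERS = {"msmpeng.exe", "csrss.exe"}


def image_name(path: str) -> str:
    """Return the lowercase file name from a Windows-style path."""
    return path.replace("/", "\\").rsplit("\\", 1)[-1].lower()


def is_suspicious_lsass_access(event: dict) -> bool:
    """Flag unexpected processes that open lsass.exe to read its memory."""
    if event.get("action") != "process_access":
        return False
    if image_name(event.get("target_image", "")) != "lsass.exe":
        return False
    if not event.get("reads_memory", False):
        return False
    return image_name(event.get("source_image", "")) not in EXPECTED_LSASS_READERS


# A credential dumper that has been renamed and recompiled still trips the
# rule, because the rule keys on the behavior rather than a name or hash.
renamed_dumper = {
    "action": "process_access",
    "source_image": r"C:\Temp\svc_update.exe",
    "target_image": r"C:\Windows\System32\lsass.exe",
    "reads_memory": True,
}
print(is_suspicious_lsass_access(renamed_dumper))  # True
```

Contrast this with the hash example at the base of the pyramid: to evade this rule, the attacker has to change how they obtain credentials, not just what the file looks like.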

With this in mind, Trellix’s detection engineering processes target the top layers of the pyramid, to provide solid defenses to customers that make life difficult for cyber criminals.

Threat-informed detection engineering

Achieving all of this is not simple, so it requires a methodical approach. Let’s step through the approach here.

First, it’s question time. What is the goal of the adversary? What type of threat are they posing? Is it an Infostealer? Is this a Remote Access Trojan (RAT), or are we seeing activity from a new C2 framework? Asking a myriad of questions allows us to collect the relevant context around the threat, and start unpacking the details.

Then, the EDR team identifies the key behaviors and TTPs of the adversary. Understanding the approach of the adversary in trying to achieve their goals, along with what tools they are using, and the nature of these tools, all helps to start building out a detection strategy.

Trellix ATLAS is a good starting point to gather observables from our threat intelligence base, for the engineering team to start crafting new detection logic. Digging into campaign observables allows the EDR team to delve deeper and see what is trending. For example, are we seeing an uptick in usage of AdFind by an emerging actor? Are we seeing a specific tool like LaZagne used by a lot of actors including RansomHub, APT33, APT34, APT35, and MuddyWater?
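The "what is trending" question can be pictured as a simple aggregation: how many distinct actors have been seen with each tool? The sketch below uses placeholder actor names and made-up sightings purely for illustration; it does not reflect real ATLAS data or its query interface.

```python
from collections import defaultdict

# Hypothetical campaign observables as (actor, tool) pairs. Placeholder data only.
observables = [
    ("actor-a", "LaZagne"),
    ("actor-b", "LaZagne"),
    ("actor-b", "AdFind"),
    ("actor-c", "LaZagne"),
    ("actor-a", "LaZagne"),  # repeat sighting by the same actor
]


def actors_per_tool(pairs):
    """Rank tools by the number of distinct actors observed using them."""
    tools = defaultdict(set)
    for actor, tool in pairs:
        tools[tool].add(actor)  # a set ignores repeat sightings per actor
    ranked = ((tool, len(actors)) for tool, actors in tools.items())
    return sorted(ranked, key=lambda item: (-item[1], item[0]))


print(actors_per_tool(observables))
# [('LaZagne', 3), ('AdFind', 1)]
```

A tool shared by many actors is a strong candidate for new detection logic, since one rule then disrupts several adversaries at once.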

A real-world ransomware example

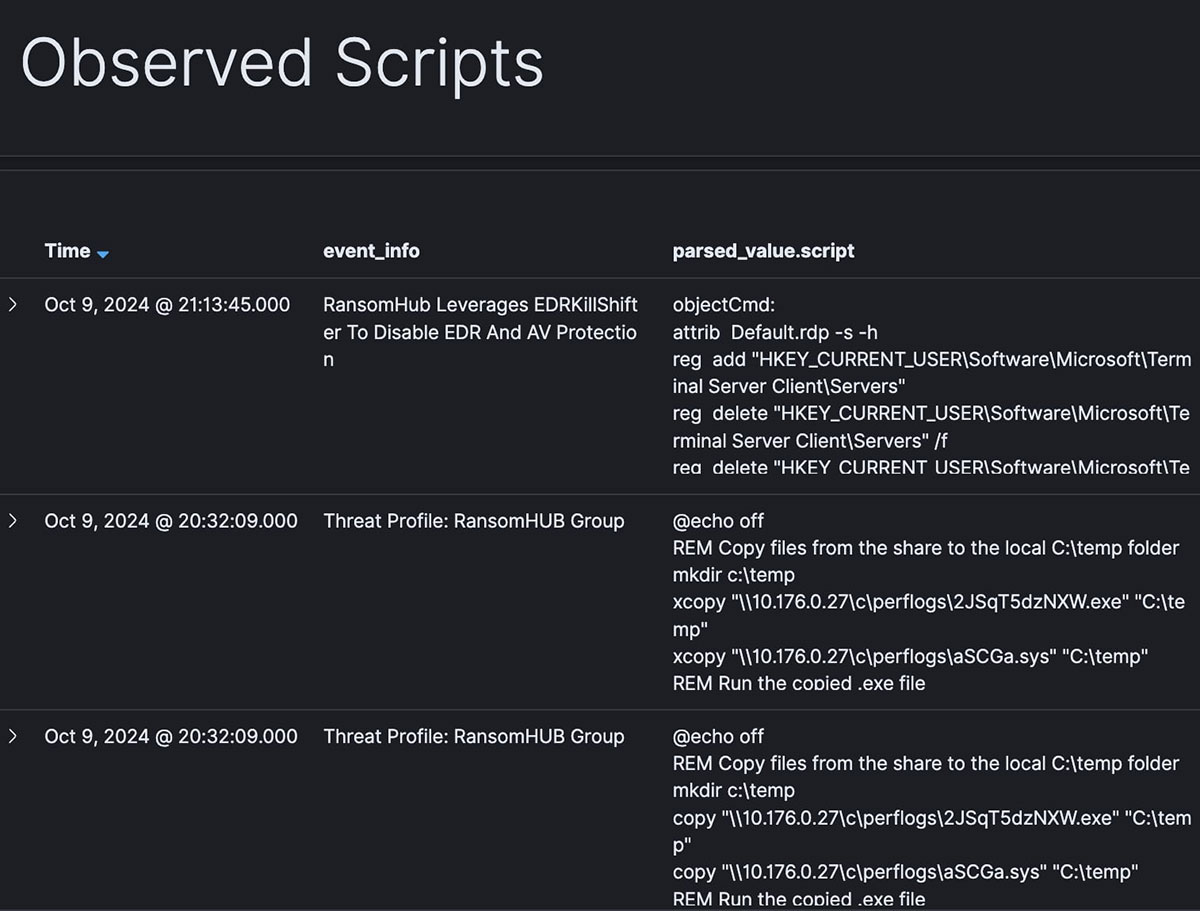

Let’s look closer at RansomHub, a group that has quickly become one of the most active ransomware gangs. We can dig in and see both high level detail about tactics, as well as more direct technical information, like tools and specific command line strings used by the group.

Below, we can see the scripts we’ve collected that are associated with RansomHub, and we see a reference to “EDRKillShifter”:

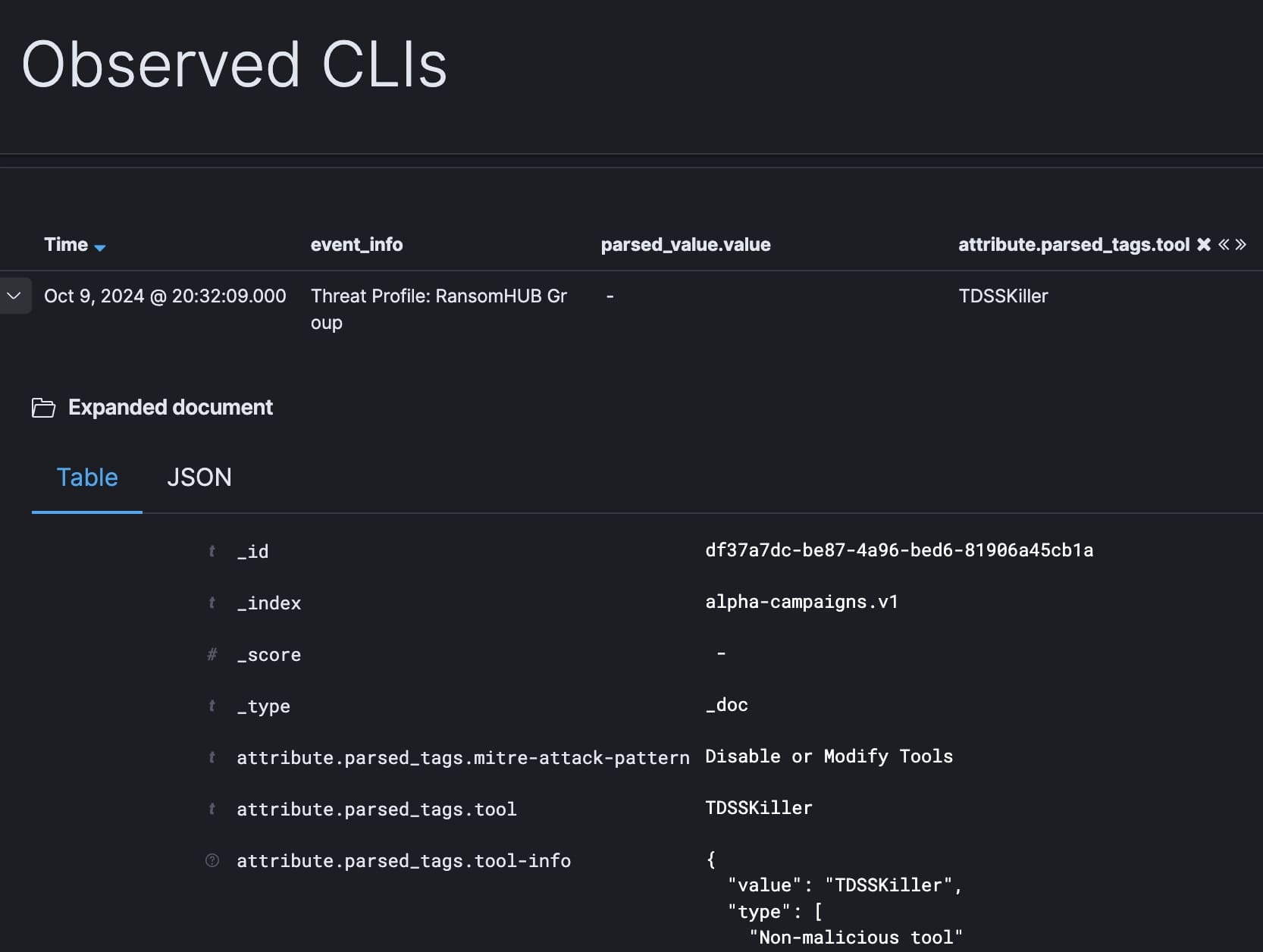

We can also see the specific observed command line strings known to be used by RansomHub. Immediately we can see a mention of “TDSSKiller”:

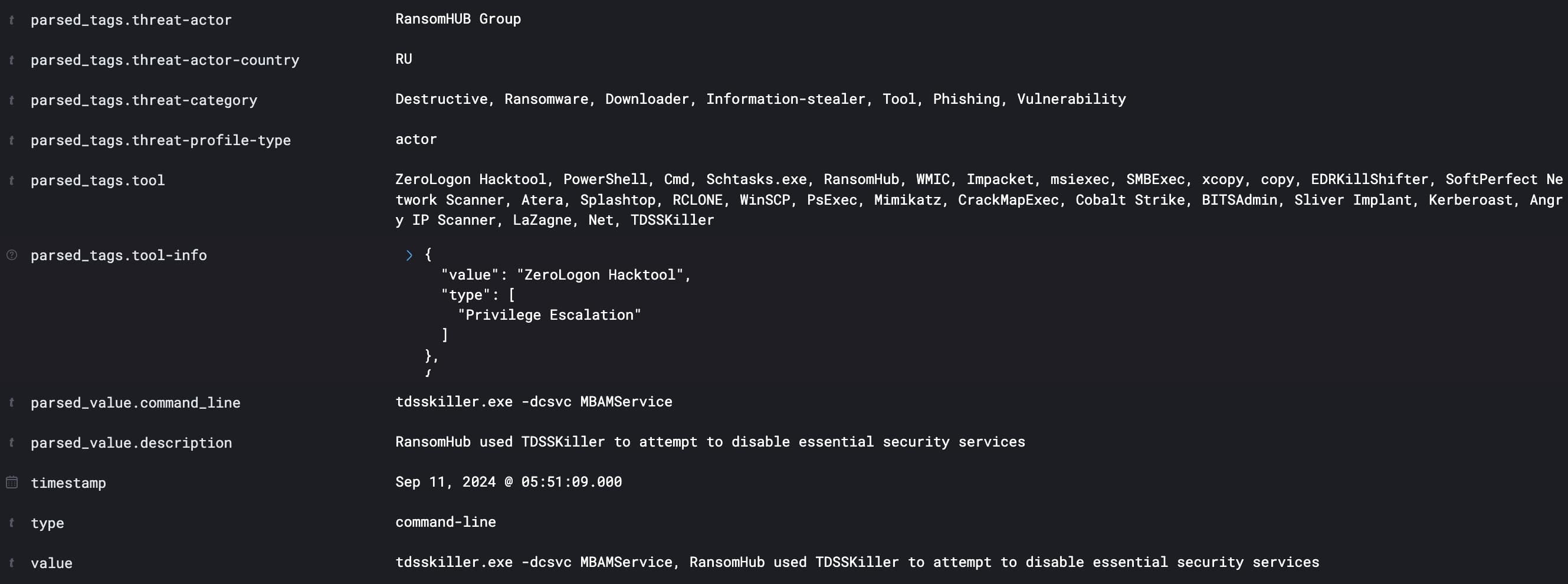

Digging into the command further, we also see a reference to “Zerologon”:

Now we’ve got three points of interest:

- Zerologon: a vulnerability that affects Microsoft’s Netlogon, and allows an attacker to gain domain administrator privileges

- TDSSKiller: a legitimate tool built by Kaspersky designed to remove rootkits, but abused by attackers to disable defensive detection measures

- EDRKillShifter: an “EDR killer”, designed to disable endpoint detection and response tools

Zerologon is a well-known vulnerability, so we’re certainly able to detect it with EDR tools. But what if the EDR tools are disabled by an attacker? The vulnerability is much more likely to go undetected, allowing the attacker to freely navigate a victim environment. That’s exactly the strategy of RansomHub!

This information can be used as a basis for new detection rules, and the EDR detection team follows a native detection-as-code approach. Through this, they implement best practices from the software engineering world, including code versioning, unit testing, peer reviews, continuous integration, and build level testing.
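To give a feel for what detection-as-code means in practice, here is a minimal sketch of a rule kept in source control next to its unit tests. The rule, its pattern, and the sample command lines are assumptions made for illustration; they are not the actual Trellix detection content or the strings shown in the screenshots above.

```python
import re

# Hypothetical rule: flag any command line that invokes TDSSKiller,
# whether by bare name or by full path, regardless of letter case.
TDSSKILLER_PATTERN = re.compile(r'(?:^|[\\/\s"])tdsskiller(?:\.exe)?\b', re.IGNORECASE)


def detect_tdsskiller(command_line: str) -> bool:
    """Return True when the command line appears to launch TDSSKiller."""
    return bool(TDSSKILLER_PATTERN.search(command_line))


# Unit tests live beside the rule and run in continuous integration,
# so a later edit that breaks the detection fails the build.
def test_flags_full_path_invocation():
    assert detect_tdsskiller(r"C:\Users\Public\TDSSKiller.exe")


def test_flags_bare_name_via_shell():
    assert detect_tdsskiller("cmd.exe /c tdsskiller")


def test_ignores_unrelated_process():
    assert not detect_tdsskiller("notepad.exe readme.txt")
```

A test runner such as pytest would collect the `test_` functions automatically. Because the rule is ordinary code, it can also go through the same versioning and peer review as any other change.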

Once the initial detection logic is built, the team monitors the performance of it via telemetry analysis, and fine tunes the logic accordingly. This is done thoroughly before it is released fully to the production environment.
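One simple way to picture this tuning loop is to measure how many of a rule's hits were truly malicious before and after a change. The labeled events, the rule variants, and the allowlisted parent process below are all hypothetical; real tuning works on far larger telemetry sets and tracks more than a single metric.

```python
# Hypothetical labeled telemetry: (event, confirmed_malicious) pairs.
labeled_events = [
    ({"cmd": "tdsskiller.exe", "parent": "cmd.exe"}, True),
    ({"cmd": "tdsskiller.exe", "parent": "powershell.exe"}, True),
    ({"cmd": "tdsskiller.exe", "parent": "it_helpdesk_tool.exe"}, False),
    ({"cmd": "notepad.exe", "parent": "explorer.exe"}, False),
]

# Assumed allowlist of parents that legitimately launch the tool.
ALLOWED_PARENTS = {"it_helpdesk_tool.exe"}


def broad_rule(event: dict) -> bool:
    """First draft: alert on any TDSSKiller execution."""
    return "tdsskiller" in event["cmd"].lower()


def tuned_rule(event: dict) -> bool:
    """Tuned draft: ignore executions launched by an approved parent."""
    return broad_rule(event) and event["parent"].lower() not in ALLOWED_PARENTS


def precision(rule, events):
    """Fraction of the rule's hits that were confirmed malicious."""
    hits = [malicious for event, malicious in events if rule(event)]
    return sum(hits) / len(hits) if hits else None


print(precision(broad_rule, labeled_events))  # 2 of 3 hits are malicious
print(precision(tuned_rule, labeled_events))  # 2 of 2 hits are malicious
```

In this toy data set the tuned rule removes the false positive without losing either true detection. Checking that second property matters as much as the first, since an allowlist that is too generous hands attackers a way around the rule.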

It’s through this process that threat intelligence can be generated, too. During the testing and tuning of the detection content, the EDR research team often discovers fresh campaigns, and IOCs from these campaigns are shared with the Trellix ARC team for further investigation.

Phase 3 - Building a customer defense strategy

The final step in transforming threat research into a defense strategy for Trellix customers is to build a security solution that suits each organization and the characteristics that make them unique. This is where Trellix’s Solutions Engineering team steps in.

Understanding the customer

As always, the customer is our first priority. Understanding the nature of your organization, such as the specific industry, the nature and size of the security and technology teams, and the broader business goals, is key to designing a solution that suits it best. It’s here that we’ll try to capture the needs and challenges, and really unpack the reasons for these with a consultative approach.

Every business has different drivers for security projects and of course, improving security across the board is a goal for everyone. Realistically though, there are a few other aspects that shape the procurement and implementation of security solutions. These often include:

- Regulatory requirements, such as compliance with HIPAA in the health industry, GDPR across Europe, or the Essential Eight in Australia

- Risk appetite: for example, financial institutions typically have a lower risk tolerance which drives them to make additional security investments

- Culture and capability: is the organization mature enough to implement certain controls, and will users be receptive to change?

- Budgetary concerns: does the organization have funding for this project?

- Internal skill set: can the security team realize the full benefit of this solution?

Solutions Engineers (SEs) need to do deep discovery with customers to understand their main drivers and limitations around their security goals. This process is not only about gathering information, but also understanding the different people involved in the project, and the challenges they have.

It’s tempting to simply ask the CISO what their challenges are, but they also need to rely on the people below them to be decision makers too. The CISO won’t necessarily see the day-to-day blockers or problems that the analysts and senior cyber staff are acutely aware of, so it’s our job as SEs to uncover these issues and understand what these staff want to see improved.

Understanding the threat landscape

To allow SEs to have these conversations with customers, they need to keep up-to-date with how attackers and their TTPs are evolving. This means lots of reading and research, as well as continuously learning from our customers and peers. Blogs and social media posts, especially those by practitioners and researchers, are useful to keep up with novel attacks, trends, victim types and the effectiveness of various defensive measures.

This knowledge needs to extend to specific industries - conversations we have with a healthcare company can be vastly different to those we have with a government department or a bank. Customers expect SEs to understand their specific security threats and build appropriate defenses.

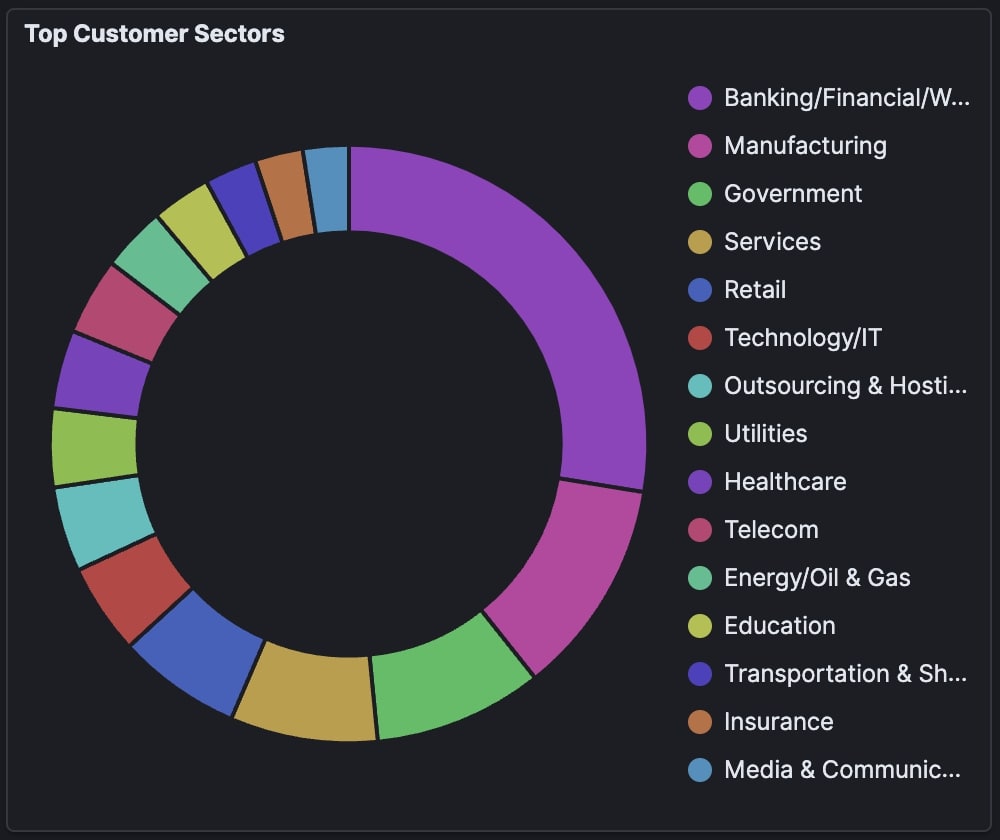

Working alongside Trellix ARC has its advantages too. With access to vast telemetry information through ATLAS, SEs are able to understand attack trends across industries and regions globally. For example, we can check the top affected customer sectors here:

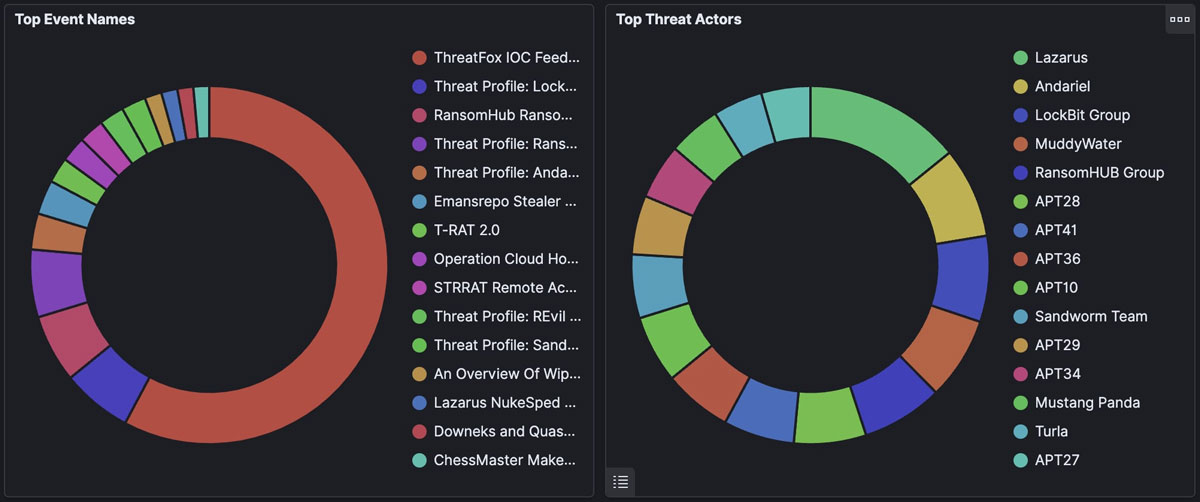

An SE speaking to a customer in Germany can see the top campaigns and threat actors for a given time period:

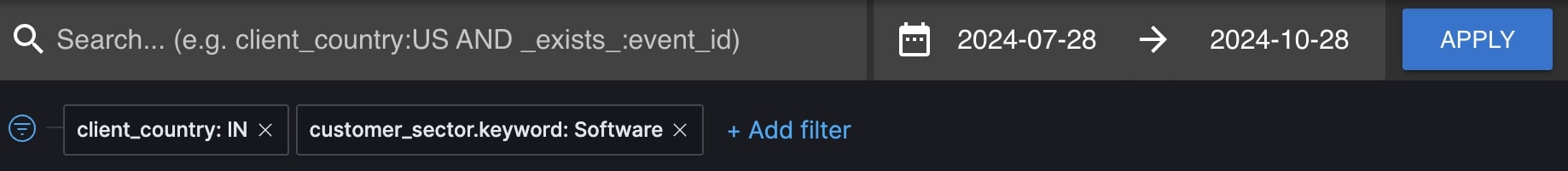

We can narrow it down as far as we wish, too. If we’re speaking to a software company in India, we can see all the key information from the last three months by using this filter:

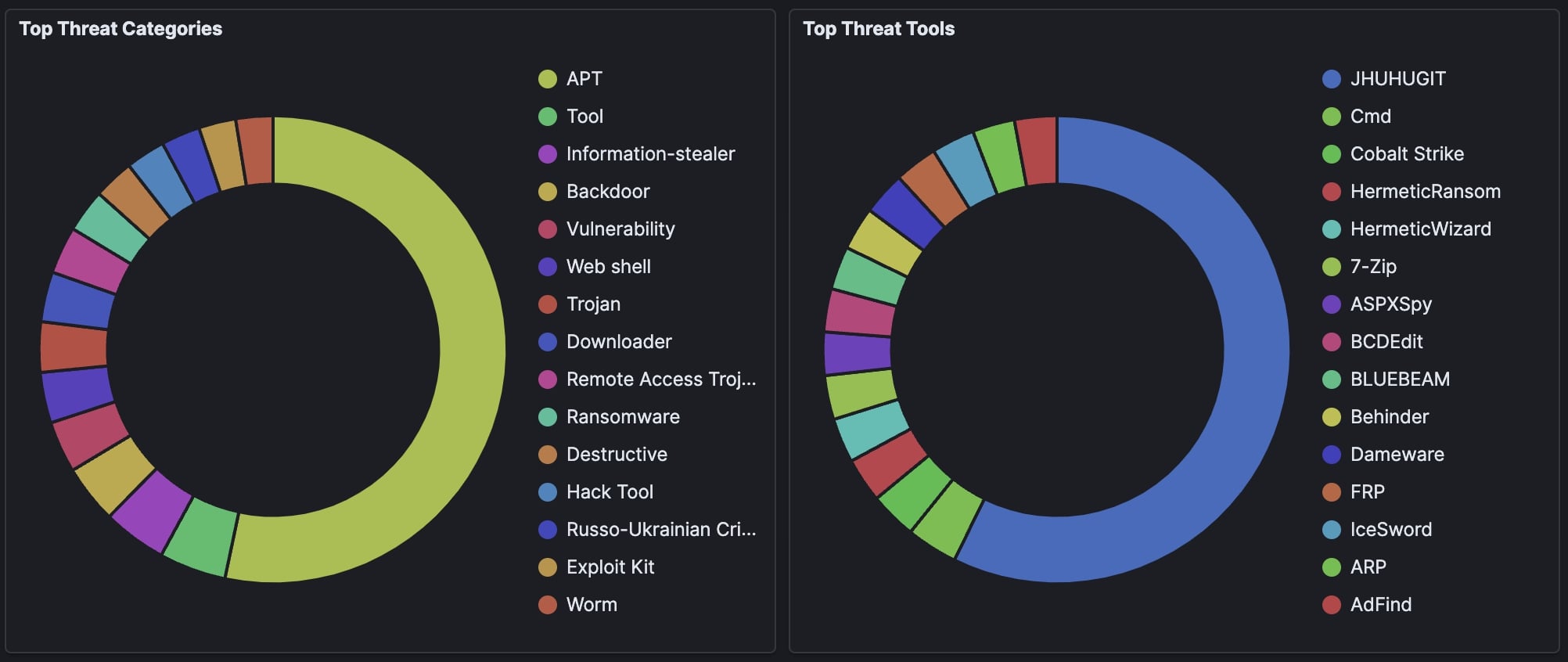

With additional context around top threat categories and attacker tools:

Trellix ATLAS is an analytical tool, but this gives us a good starting point to understand what a specific customer’s threat landscape looks like in recent months. We can also work closely with Trellix’s Threat Intelligence Group to understand in more depth.

Bringing it all together

SEs need to combine the business-focused conversation with the understanding of the threat landscape to build a defensive solution for customers. This is a careful balance: simply being technical is not enough - SEs must understand the customer’s business goals, limitations, and operational challenges to tailor a technical solution that meets them where they are today.

The SE will consider the existing internal tooling and architecture of the organization and build a solution using Trellix’s wide range of products that not only solves their immediate security concerns, but also provides a platform that they can expand upon into the future.

Conclusion

As this blog outlines, a lot of research and effort goes into transforming intelligence into effective detection, and into customer defense strategies. A collaborative effort between research, intelligence, engineering and solutions engineering is required, and ensures improved detection efficacy across the board.

Through Trellix’s broad collection, research and analysis capabilities, we’re able to do more than generate intelligence: we turn it into practical solutions for our customers. In this blog we focused on detection engineering in our EDR tool specifically, but it’s important to remember that we use intelligence to build better detection logic across the entire Trellix product suite.
