
Using Data Loss Prevention to Prevent Data Leakage via ChatGPT

By Zak Krider · April 17, 2023

The rapid advancement of Artificial Intelligence (AI) technology has drawn a great deal of attention in recent weeks for its potential to improve workplace productivity and efficiency. However, this focus on AI innovation has overshadowed the risks that come with such new technologies. Cybercriminals are likely to begin using AI services such as ChatGPT and Google Bard to develop attacks against organizations, which highlights the cybersecurity risks these services pose. Beyond that, there has been little discussion of the risk of users exposing an organization's intellectual property (IP) or secrets through these services.

Although many AI services state that they do not cache user-submitted data or use it for training, recent incidents involving ChatGPT have exposed vulnerabilities in the system. Vulnerabilities like these could expose company intellectual property, or users' prompts along with the responses to them, posing a significant risk to organizations. As a result, companies are increasingly concerned about how to leverage AI services while keeping their sensitive data from being exposed to the public. This underscores the need for companies to assess the risks and benefits of AI services and to implement measures to protect their sensitive data.

To address these risks, companies must take a proactive approach to keeping their sensitive data from leaking through AI services. This may include implementing Data Loss Prevention (DLP) solutions that monitor and safeguard data at the endpoint and network levels. Companies should also educate their employees about the risks of AI services (just as with any other third-party service) and the potential consequences of exposing sensitive data. By taking these steps, organizations can benefit from AI services while mitigating the risks to their sensitive data.

While AI services offer significant benefits to organizations, they also pose potential cybersecurity risks. Companies must carefully consider these risks and implement measures to safeguard their sensitive data from being exposed. By adopting a proactive approach to cybersecurity, organizations can leverage the benefits of AI services while protecting their most valuable assets.

Trellix Can Help Safeguard Your Data

New technology can introduce security gaps that can make organizations susceptible to accidental or deliberate breaches. As technology evolves, it is crucial for companies to have adequate security measures in place to safeguard their sensitive data. Trellix has a reputation for providing effective protection against potential threats, due to its extensive experience in the cybersecurity space.

You might be asking yourself how this could happen in your environment. Given the efficiencies these AI services bring, users will rely on them more and more in their daily tasks. Here are just a few examples of how users might use these services and unintentionally leak sensitive data:

- An employee might capture meeting notes and action items from an internal meeting about a new product that will disrupt the market. To prepare a summary for the executive team, they might paste the notes into ChatGPT and ask it to summarize them.

- A software developer working on a company’s top product might copy and paste source code into ChatGPT and ask it for recommendations to improve the code.

- An engineer might copy and paste log files submitted by an end user into ChatGPT and ask it to write a root cause analysis report, without first scrubbing usernames, IP addresses, and system names from the logs.
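The third example is exactly the kind of content a DLP classification is meant to catch. As a rough illustration only, the Python sketch below flags IPv4 addresses and `user=`/`username=` fields in log text with regular expressions. The patterns and the `find_sensitive_tokens` helper are hypothetical and are not how Trellix classifications are defined; system names would need organization-specific patterns, which this sketch does not attempt.

```python
import ipaddress
import re

# Illustrative patterns only (assumptions, not Trellix classification syntax):
# candidate IPv4 addresses and "user=" / "username=" fields in log lines.
IPV4_CANDIDATE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")
USERNAME_FIELD = re.compile(r"\buser(?:name)?=(\S+)", re.IGNORECASE)


def find_sensitive_tokens(log_text: str) -> dict[str, list[str]]:
    """Return the IPv4 addresses and usernames found in a block of log text."""
    ipv4 = []
    for candidate in IPV4_CANDIDATE.findall(log_text):
        try:
            ipaddress.IPv4Address(candidate)  # rejects out-of-range octets such as 999
        except ValueError:
            continue
        ipv4.append(candidate)
    usernames = USERNAME_FIELD.findall(log_text)
    return {"ipv4": ipv4, "usernames": usernames}


if __name__ == "__main__":
    sample = "2023-04-17 10:02:11 auth failed user=jdoe src=10.20.30.40 host=build-07"
    print(find_sensitive_tokens(sample))
    # {'ipv4': ['10.20.30.40'], 'usernames': ['jdoe']}
```

A match here would only tell you the text is sensitive; deciding whether to report or block it is the job of the DLP rules configured below.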

Trellix's Data Loss Prevention (DLP) solutions are designed to monitor and safeguard organizations at both the endpoint and network levels. This comprehensive approach helps keep sensitive information secure, even as new technologies emerge. With Trellix's DLP solutions, organizations can better protect their crown jewels from potential security breaches.

Leveraging Trellix Data Loss Prevention

Trellix Data Loss Prevention (DLP) offers a comprehensive collection of pre-defined rule set templates that can be used to rapidly establish DLP in any setting. Although these out-of-the-box templates may not satisfy all your DLP needs, they provide valuable guidance on how to protect data within your environment. In the case of AI services like ChatGPT and Google Bard, which present unique challenges for securing your environment, building the necessary rules can be accomplished in a matter of minutes. This allows you to promptly deploy the rules for testing, tuning, and enforcement in your production environment.

To safeguard your data from being leaked to these services, there are three types of DLP rules that can be utilized:

- Clipboard Protection: Monitor or block the use of the clipboard to copy sensitive data

- Web Protection: Monitor data being posted to websites

- Application Control: Monitor or block user access to websites

Before creating the rules, you first need to create the definitions they will use. For the use case in this article, create the following DLP definition types:

- URL List: Used to define web protection rules and web content fingerprinting classification criteria. They are added to rules and classifications as Web address (URL) conditions.

- Application Template: Controls specific applications using properties such as product or vendor name, executable file name, or window title. An application template can be defined for a single application, or a group of similar applications. There are built-in (predefined) templates for several common applications such as Windows Explorer, web browsers, encryption applications, and email clients.

From the Trellix ePolicy Orchestrator (ePO) console, navigate to DLP Policy Manager | Definitions.

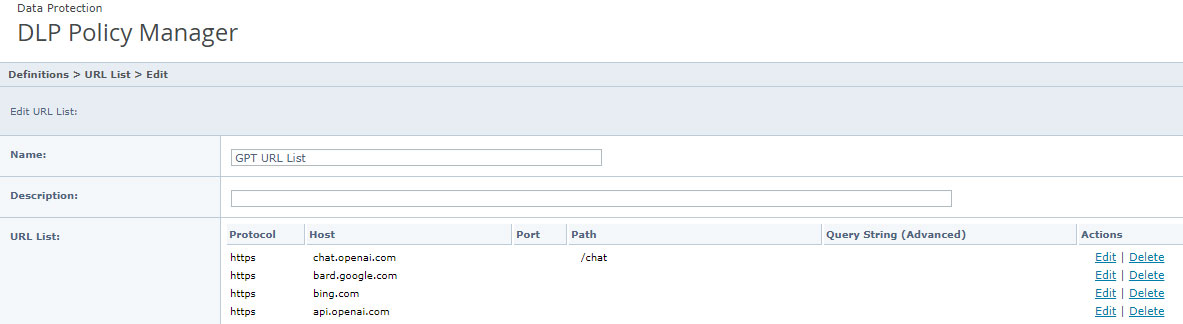

Destination URL

You will want to select the URL List category from the Source / Destination section and create a new URL list.

We will focus on:

- ChatGPT – https://chat.openai.com

- Google Bard – https://bard.google.com

- Bing AI – https://bing.com

- ChatGPT API – https://api.openai.com

- Any other LLM services you’d like to add

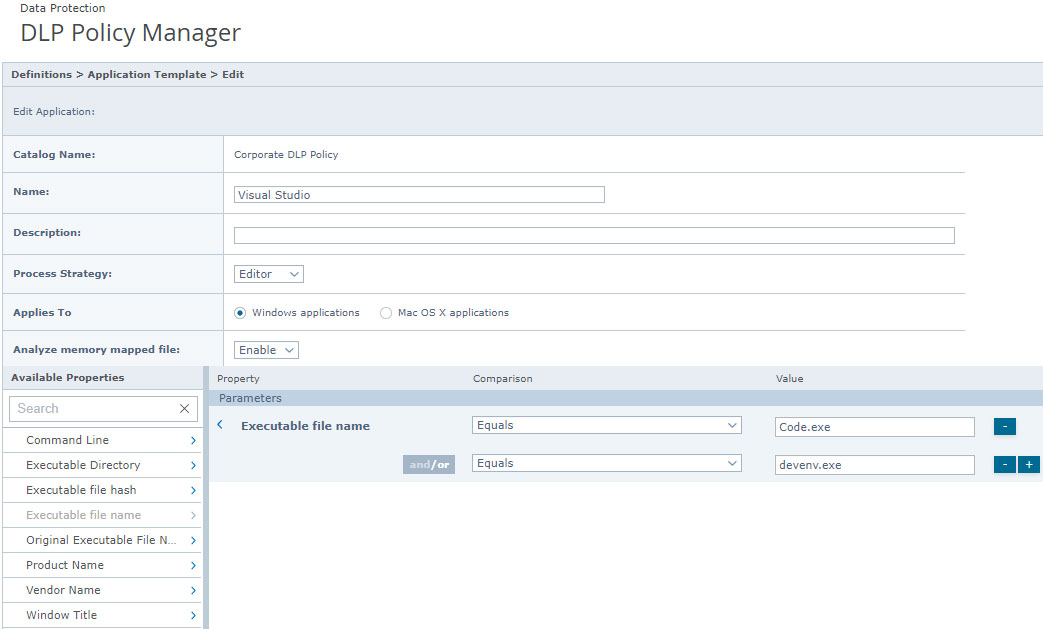
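To make the URL list concrete, here is a minimal Python sketch of checking a browser URL against it. It assumes a listed entry covers its host and any subdomain of that host; `GPT_URL_LIST` and `matches_url_list` are hypothetical names, and the product's actual URL-matching semantics may differ, so check the Trellix DLP documentation.

```python
from urllib.parse import urlsplit

# Hypothetical in-code copy of the "GPT URL List" above (an assumption for
# illustration; the real list lives in the ePO DLP definition, not in code).
GPT_URL_LIST = [
    "https://chat.openai.com",
    "https://bard.google.com",
    "https://bing.com",
    "https://api.openai.com",
]


def host_of(url: str) -> str:
    """Lower-cased host name of a URL, or "" if it has none."""
    return (urlsplit(url).hostname or "").lower()


def matches_url_list(url: str, url_list: list[str]) -> bool:
    """True if the URL's host equals a listed host or is a subdomain of one.

    Host/subdomain matching is an assumption of this sketch.
    """
    host = host_of(url)
    if not host:
        return False
    for entry in url_list:
        listed = host_of(entry)
        if host == listed or host.endswith("." + listed):
            return True
    return False


if __name__ == "__main__":
    for url in ("https://chat.openai.com/c/123", "https://www.bing.com/search?q=dlp",
                "https://openai.com/blog", "https://notbing.com"):
        print(url, matches_url_list(url, GPT_URL_LIST))
```

Under this sketch's matching rule, the `https://bing.com` entry covers all of Bing, including ordinary search, not only its AI features; a narrower entry may be worth considering if that is too broad for your environment.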

Source Application

You will want to select the Application Template category from the Source / Destination section and create a new application template.

We will focus on:

- Visual Studio – devenv.exe

- Visual Studio Code – Code.exe
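An application template that matches on executable file name can be pictured as a case-insensitive set lookup. The sketch below assumes matching on the file name alone (real templates can also use product name, vendor name, or window title, as described above); `VISUAL_STUDIO_TEMPLATE` and `matches_app_template` are hypothetical.

```python
import ntpath

# Hypothetical in-code copy of the Visual Studio application template above,
# matched on executable file name only. Names are stored lower-case.
VISUAL_STUDIO_TEMPLATE = frozenset({"devenv.exe", "code.exe"})


def matches_app_template(process_path: str, template: frozenset[str]) -> bool:
    """True if the process's executable name is in the template.

    The comparison is case-insensitive because Windows file names are.
    ntpath parses Windows paths regardless of the OS running this code.
    """
    exe_name = ntpath.basename(process_path).lower()
    return exe_name in template


if __name__ == "__main__":
    vscode = r"C:\Users\dev\AppData\Local\Programs\Microsoft VS Code\Code.exe"
    print(matches_app_template(vscode, VISUAL_STUDIO_TEMPLATE))  # True
    print(matches_app_template(r"C:\Windows\notepad.exe", VISUAL_STUDIO_TEMPLATE))  # False
```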

Once the definitions are created, you can begin building the rules for your environment. First, create a new rule set in the DLP Policy Manager named 'GPT Monitoring and Blocking', then add the rules that meet your requirements. Use the following samples as a guide for crafting rules that address your specific use cases. Before deploying these rules to a production environment, Trellix strongly recommends testing them thoroughly in a controlled environment and making any adjustments needed so they do not adversely impact business operations.

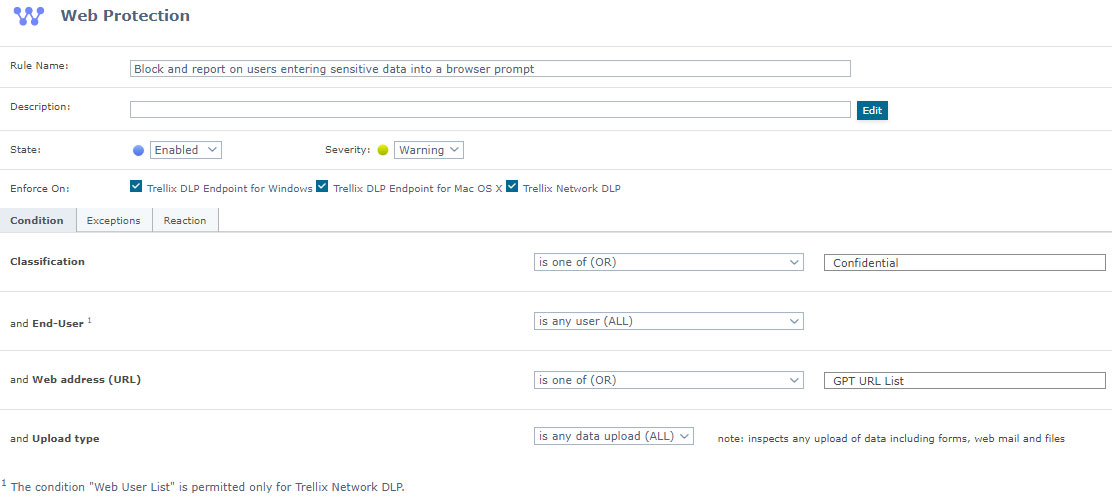

- A. Report on users entering sensitive data into a browser prompt

  - Create a new web protection rule from Data Protection | Actions | New Rule | Web Protection.

  - The conditions for the rule should be:

    - a. Enforced On: Windows and Network

      (Note: On macOS, only reporting is supported.)

      (Note: The section highlighted in the screenshot below is currently supported only in Firefox; it is not supported in Chrome or Edge.)

    - b. Classification: Select the content that you want to detect when it is posted to the URLs

    - c. End-User: All

    - d. Web Address (URL): Select the URL list created in the previous step, i.e., GPT URL List

    - e. Upload Type: All

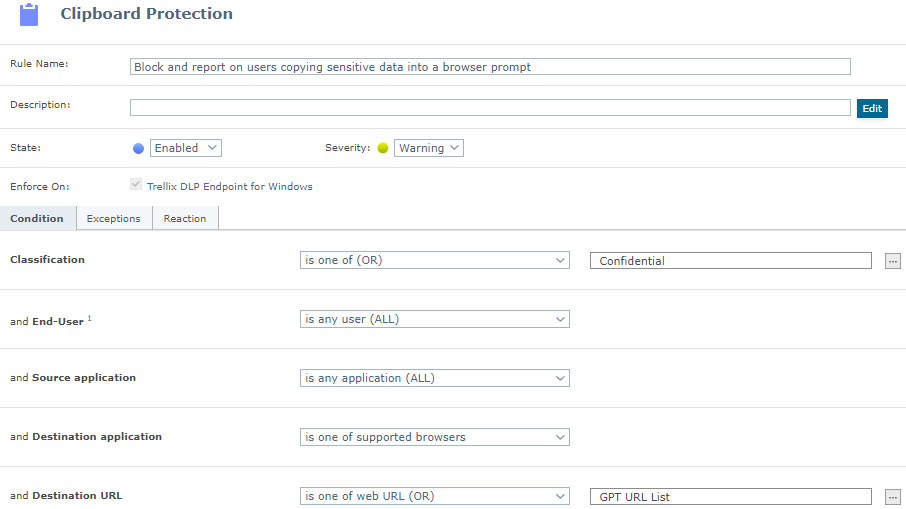
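Assuming the conditions in a rule are combined with a logical AND, rule A can be read as the Python sketch below: a web upload is reported only when its destination is in the GPT URL list and its content carries one of the rule's classifications, and it is never blocked. The host list, the classification names (`Source Code`, `Customer PII`), and the `WebUpload` type are hypothetical stand-ins for the ePO definitions.

```python
from dataclasses import dataclass, field
from urllib.parse import urlsplit

# Hypothetical stand-ins for the ePO definitions used by rule A.
GPT_HOSTS = ("chat.openai.com", "bard.google.com", "bing.com", "api.openai.com")
RULE_CLASSIFICATIONS = frozenset({"Source Code", "Customer PII"})


def in_gpt_list(url: str) -> bool:
    """Host equals, or is a subdomain of, a listed host (assumed matching rule)."""
    host = urlsplit(url).hostname or ""
    return any(host == h or host.endswith("." + h) for h in GPT_HOSTS)


@dataclass
class WebUpload:
    user: str
    url: str
    # Classifications the DLP engine assigned to the posted content.
    classifications: set[str] = field(default_factory=set)


def evaluate_rule_a(upload: WebUpload) -> str:
    """Rule A: report, never block, when every modeled condition matches."""
    # End-User: All and Upload Type: All add no restriction, so only the
    # Web Address (URL) and Classification conditions are checked here.
    if in_gpt_list(upload.url) and upload.classifications & RULE_CLASSIFICATIONS:
        return "report"
    return "no match"


if __name__ == "__main__":
    print(evaluate_rule_a(WebUpload("jdoe", "https://chat.openai.com/", {"Source Code"})))  # report
    print(evaluate_rule_a(WebUpload("jdoe", "https://chat.openai.com/", set())))  # no match
```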

- B. Block and report on users copying sensitive data into a browser prompt

  - Create a new clipboard protection rule from Data Protection | Actions | New Rule | Clipboard Protection.

  - The conditions for the rule should be:

    - a. Enforced On: Windows

      (Note: On macOS, only reporting is supported.)

    - b. Classification: Select the content that you want to block from being pasted into the URLs

    - c. End-User: All

    - d. Source Application: All

    - e. Destination Application: Supported Browsers

    - f. Destination URL: The URL list created in the previous step, i.e., GPT URL List

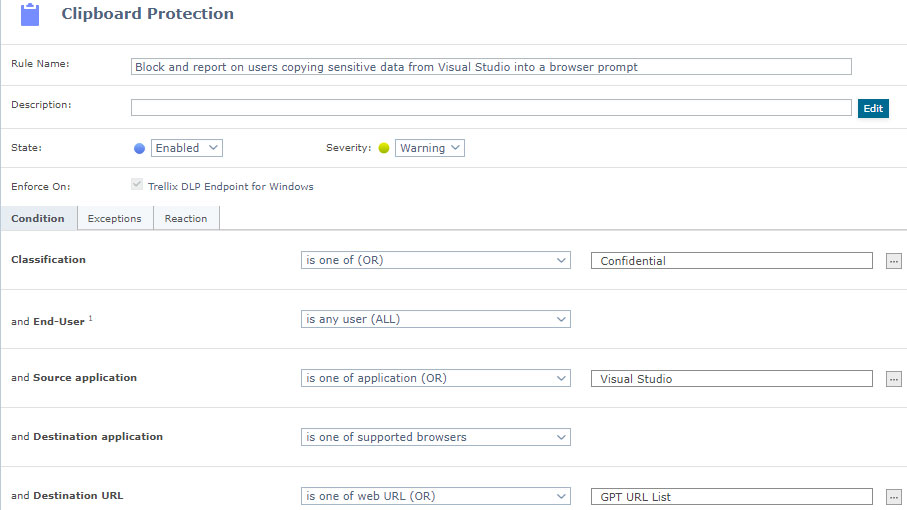

- C. Block and report on users copying sensitive data from Visual Studio into a browser prompt

  - Create a new clipboard protection rule from Data Protection | Actions | New Rule | Clipboard Protection.

  - The conditions for the rule should be:

    - a. Enforced On: Windows

      (Note: On macOS, only reporting is supported.)

    - b. Classification: Select the content that you want to block from being pasted into the URLs

    - c. End-User: All

    - d. Source Application: The Visual Studio application template created earlier

    - e. Destination Application: Supported Browsers

    - f. Destination URL: The URL list created in the previous step, i.e., GPT URL List

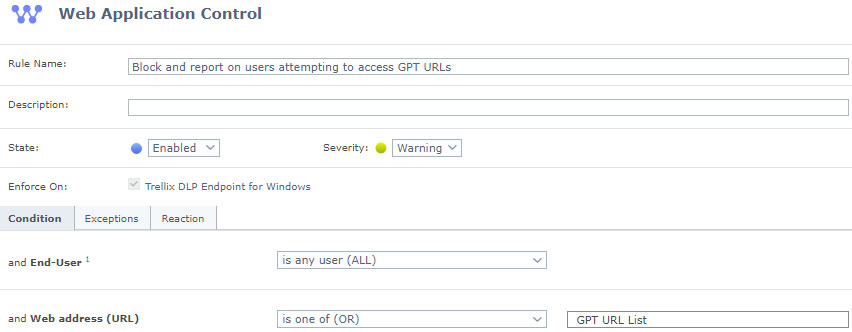
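Rules B and C share the same conditions except for Source Application: rule B applies to any source, while rule C, per its title, is meant to apply only when the data is copied from Visual Studio. The sketch below models both with one function, again assuming the conditions are ANDed. The browser executable names standing in for Supported Browsers, the classification name, and all function and type names are assumptions for illustration.

```python
import ntpath
from dataclasses import dataclass
from typing import Optional
from urllib.parse import urlsplit

# Hypothetical stand-ins for the ePO definitions referenced by rules B and C.
GPT_HOSTS = ("chat.openai.com", "bard.google.com", "bing.com", "api.openai.com")
SUPPORTED_BROWSERS = frozenset({"firefox.exe", "chrome.exe", "msedge.exe"})
VISUAL_STUDIO_TEMPLATE = frozenset({"devenv.exe", "code.exe"})
RULE_CLASSIFICATIONS = frozenset({"Source Code"})


def in_gpt_list(url: str) -> bool:
    """Host equals, or is a subdomain of, a listed host (assumed matching rule)."""
    host = urlsplit(url).hostname or ""
    return any(host == h or host.endswith("." + h) for h in GPT_HOSTS)


def exe_name(path: str) -> str:
    """Lower-cased executable file name from a Windows path."""
    return ntpath.basename(path).lower()


@dataclass
class ClipboardPaste:
    source_process: str       # where the data was copied from
    destination_process: str  # where the data is being pasted
    destination_url: str      # page open in the destination browser
    classifications: frozenset[str]


def evaluate_clipboard_rule(paste: ClipboardPaste,
                            source_template: Optional[frozenset[str]] = None) -> str:
    """Rule B when source_template is None (Source Application: All); rule C otherwise."""
    if source_template is not None and exe_name(paste.source_process) not in source_template:
        return "no match"
    if exe_name(paste.destination_process) not in SUPPORTED_BROWSERS:
        return "no match"
    if not in_gpt_list(paste.destination_url):
        return "no match"
    if not paste.classifications & RULE_CLASSIFICATIONS:
        return "no match"
    return "block and report"


if __name__ == "__main__":
    paste = ClipboardPaste(
        source_process=r"C:\Tools\notepad.exe",
        destination_process=r"C:\Program Files\Mozilla Firefox\firefox.exe",
        destination_url="https://chat.openai.com/",
        classifications=frozenset({"Source Code"}),
    )
    print(evaluate_clipboard_rule(paste))                          # rule B: block and report
    print(evaluate_clipboard_rule(paste, VISUAL_STUDIO_TEMPLATE))  # rule C: no match
```

Passing the source template as an optional argument keeps the shared conditions in one place, which mirrors how the two rules differ in the console by a single field.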

- D. Block and report on users attempting to access GPT URLs

  NOTE: The Web Application Control rule currently supports blocking only in Firefox; Chrome and Edge activity is monitored and reported only.

  - Create a new Web Application Control rule from Data Protection | Actions | New Rule.

  - The conditions for the rule should be:

    - a. End-User: All

    - b. Web Address (URL): The URL list created in the previous step, i.e., GPT URL List
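The browser limitation in the note above changes what rule D actually does on an endpoint. The sketch below encodes that note: a visit to a listed URL is blocked and reported in Firefox but only reported in Chrome or Edge. The executable names, the host list, and the treatment of other browsers (simply allowed here, since the note does not cover them) are assumptions.

```python
import ntpath
from urllib.parse import urlsplit

# Hypothetical stand-in for the GPT URL List.
GPT_HOSTS = ("chat.openai.com", "bard.google.com", "bing.com", "api.openai.com")
# From the note: blocking is enforced only in Firefox; Chrome and Edge activity
# is monitored and reported only. The executable names are assumptions.
BLOCKING_BROWSERS = frozenset({"firefox.exe"})
REPORT_ONLY_BROWSERS = frozenset({"chrome.exe", "msedge.exe"})


def in_gpt_list(url: str) -> bool:
    """Host equals, or is a subdomain of, a listed host (assumed matching rule)."""
    host = urlsplit(url).hostname or ""
    return any(host == h or host.endswith("." + h) for h in GPT_HOSTS)


def evaluate_rule_d(browser_path: str, url: str) -> str:
    """Outcome of rule D for one page visit (End-User: All, so every user is in scope)."""
    if not in_gpt_list(url):
        return "allow"
    browser = ntpath.basename(browser_path).lower()
    if browser in BLOCKING_BROWSERS:
        return "block and report"
    if browser in REPORT_ONLY_BROWSERS:
        return "allow and report"
    return "allow"  # other browsers are not covered by the note; assumed unmonitored here


if __name__ == "__main__":
    firefox = r"C:\Program Files\Mozilla Firefox\firefox.exe"
    chrome = r"C:\Program Files\Google\Chrome\Application\chrome.exe"
    print(evaluate_rule_d(firefox, "https://bard.google.com/"))  # block and report
    print(evaluate_rule_d(chrome, "https://bard.google.com/"))   # allow and report
```

Seen this way, rule D alone does not stop Chrome or Edge users from reaching these services, which is one reason to pair it with the clipboard and web protection rules above.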

Once the new rule set has been created, it can be applied to systems within the environment. Note, however, that these rules assume the organization already has a data protection program in place, including tailored classifications, and that the new rules have been thoroughly tested in the environment before a production rollout. To fully benefit from these rules and keep sensitive data secure, companies must implement appropriate security measures to prevent unauthorized use of their data. While AI services like ChatGPT can provide significant advantages, it's important to balance these benefits against the potential cybersecurity risks.

If your organization doesn't yet have a data protection program in place, or if you're unsure how to implement the new rules, or if you're not currently a Trellix customer, it's recommended to reach out to your Trellix representative for more information on how Trellix can help secure your organization. Trellix has a long history of safeguarding sensitive data and can provide expertise and solutions to help mitigate cybersecurity risks.
